The effect of Horizontal Field of View on Stereovision-based Visual Homing: Data repository

D.M. Lyons, B. Barriage, L. Del Signore

Abstract of the paper

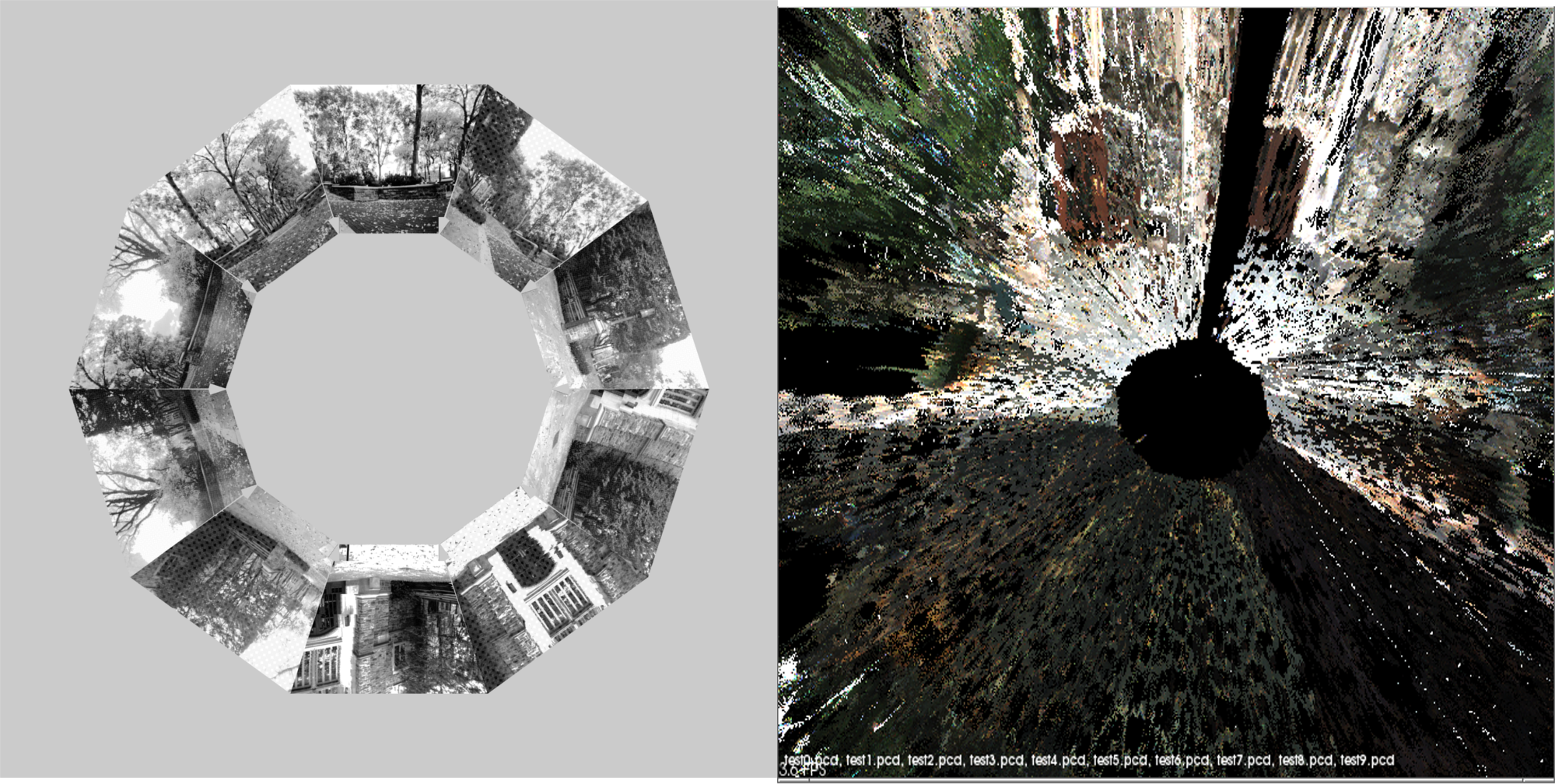

Visual homing is a bioinspired, local navigation technique used to direct a robot to a previously seen location by comparing the image of the original location with the current visual image. Prior work has shown that exploiting depth cues such as image scale or stereo-depth in homing leads to improved homing performance. In this paper, we present a stereovision database methodology for evaluating the performance of visual homing. We have collected six stereovision homing databases, three indoor and three outdoor. Results are presented for these databases to evaluate the effect of FOV on the performance of the Homing with Stereovision (HSV) algorithm. Based on over 350,000 homing trials, we show that, contrary to intuition, a panoramic field of view does not necessarily lead to the best performance and we discuss why this may be the case.

Overview of the Data Repository

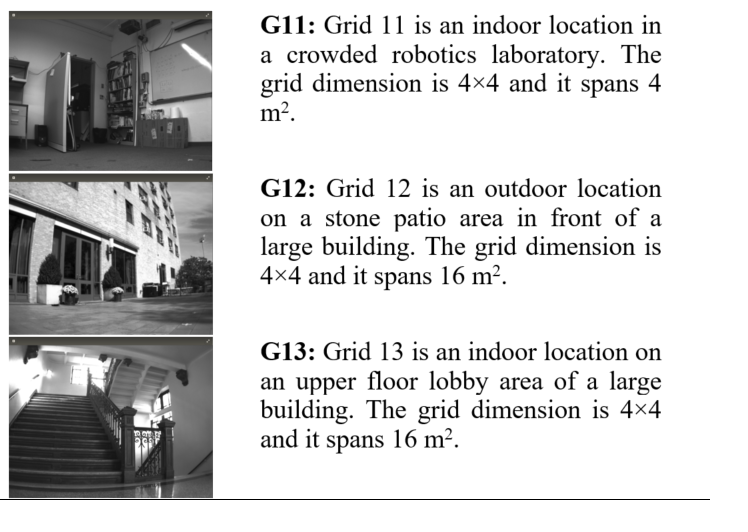

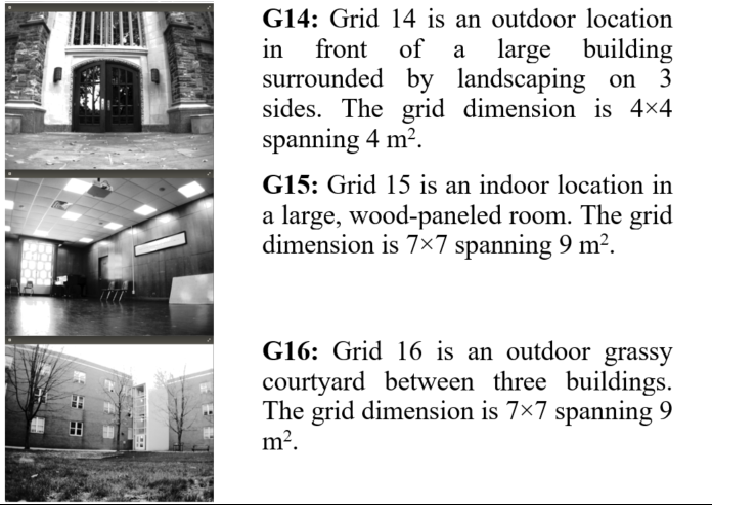

On this web page we report on six stereovision visual homing databases inspired by the panoramic image visual homing databases of Möller and others. Using these, we have conducted an evaluation of the effect of FOV on the performance of Nirmal & Lyons's HSV homing algorithm for a variety of visual homing missions in both indoor and outdoor situations, totaling over 350,000 visual homing trials. The results indicate that while in general a wide FOV outperforms a narrower FOV, peak performance is not achieved by a panoramic FOV, and we discuss why that may be the case. This web page is the data repository for the databases used in the paper. The six databases are briefly described in Table 1 below.

Integrating Stereovision Database into the HSV program

Execution begins when the program receives the coordinates of the current and goal locations, along with the orientation of the robot at each of them. Just prior to this, the user is asked to enter the grid number of the database they would like to use. When entering the coordinates, make sure the second value is negative; this is due to the orientation of the robot's axes.

COORDINATE FRAME CONVENTIONS: A zero pose of the robot has it at the origin facing the +X direction. Thus a positive angle is counterclockwise (CCW) and turns towards +Y, and a negative angle is clockwise (CW) and turns towards -Y.

The orientation and location are then used to determine the proper images to use from the database grid, along with a selection of these images that represents the forward-facing 180 degrees of the robot. These images are concatenated into two panoramic images, one representing the goal location and one representing the current location, which are examined using SIFT features to find keypoints that can be compared between the images of the two locations. Once these keypoints have been compared and a subset determined to be valid, motion is determined by comparing the theta and distance values for each of the matched points. Both of these calculations are performed within vh.cpp, and the averages are used to determine the motion of the robot. It is important to note that the distance should be calculated using the Euclidean distance formula and that a negative value should be reachable.

The robot moves only a portion of the way so that it can compensate for an incorrect turn or move, which will occasionally occur during execution. Attention should be paid to the scale and gain values within main.cpp, which have a significant effect on the program; generally, the scale should be 1000 and the gain values should be between 0.3 and 0.6, and no higher than 1.0.
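
The averaging and partial-move steps described above can be sketched in C++ as follows. This is a minimal illustration under stated assumptions, not the code in vh.cpp or main.cpp: the `MatchDelta`, `Pose`, and `Motion` types, the function names, and the use of separate turn and move gains are all hypothetical, and the per-match theta and distance values are taken as given rather than computed from SIFT matches. The pose update follows the coordinate frame convention stated above (a heading of 0 faces +X, and positive angles turn CCW towards +Y).

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical per-match record; the actual structure used in vh.cpp
// is not shown on this page.
struct MatchDelta {
    double theta;     // turn suggested by this match, radians, CCW positive
    double distance;  // signed distance suggested by this match (may be negative)
};

// Pose in the page's frame: zero pose is at the origin facing +X.
struct Pose {
    double x;
    double y;
    double heading;   // radians; positive turns CCW towards +Y
};

struct Motion {
    double turn;      // radians, CCW positive
    double advance;   // signed distance along the new heading
};

// Average the per-match values, then scale by the gains so that the robot
// covers only a portion of the estimated turn and distance in one step.
// Using two separate gains is an assumption made for this sketch.
Motion averageMotion(const std::vector<MatchDelta>& matches,
                     double turnGain, double moveGain) {
    assert(turnGain > 0.0 && turnGain <= 1.0);
    assert(moveGain > 0.0 && moveGain <= 1.0);
    Motion m{0.0, 0.0};
    if (matches.empty()) {
        return m;  // no valid matches: do not move
    }
    for (const MatchDelta& d : matches) {
        m.turn += d.theta;
        m.advance += d.distance;
    }
    const double n = static_cast<double>(matches.size());
    m.turn = turnGain * m.turn / n;
    m.advance = moveGain * m.advance / n;
    return m;
}

// Turn first, then advance along the new heading.
Pose applyMotion(const Pose& p, const Motion& m) {
    Pose q = p;
    q.heading = p.heading + m.turn;
    q.x = p.x + m.advance * std::cos(q.heading);
    q.y = p.y + m.advance * std::sin(q.heading);
    return q;
}
```

Because each step applies only a fraction of the averaged estimate, an occasional bad turn or move can be corrected on the next step, at the cost of needing more steps to reach the goal.
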
In addition, ample time and steps must be provided because the robot may still reach its location despite taking a great deal of time to do so.

Repository Information

1. Files below labelled GRIDXX_grids contain visual image folders, while those labelled GRIDXX_cleanDump contain stereo depth data.
2. Histogram smoothing was performed on the visual images of GRID14, an outdoor grid, in order to compensate for lighting conditions.
3. A statistical filtering program from the Point Cloud Library was run on the stereo depth data to clean up some of the stereo noise. The parameters of the point cloud statistical filter were a meanK of 50 and a standard deviation threshold of 0.2.

Project Figures & Diagrams

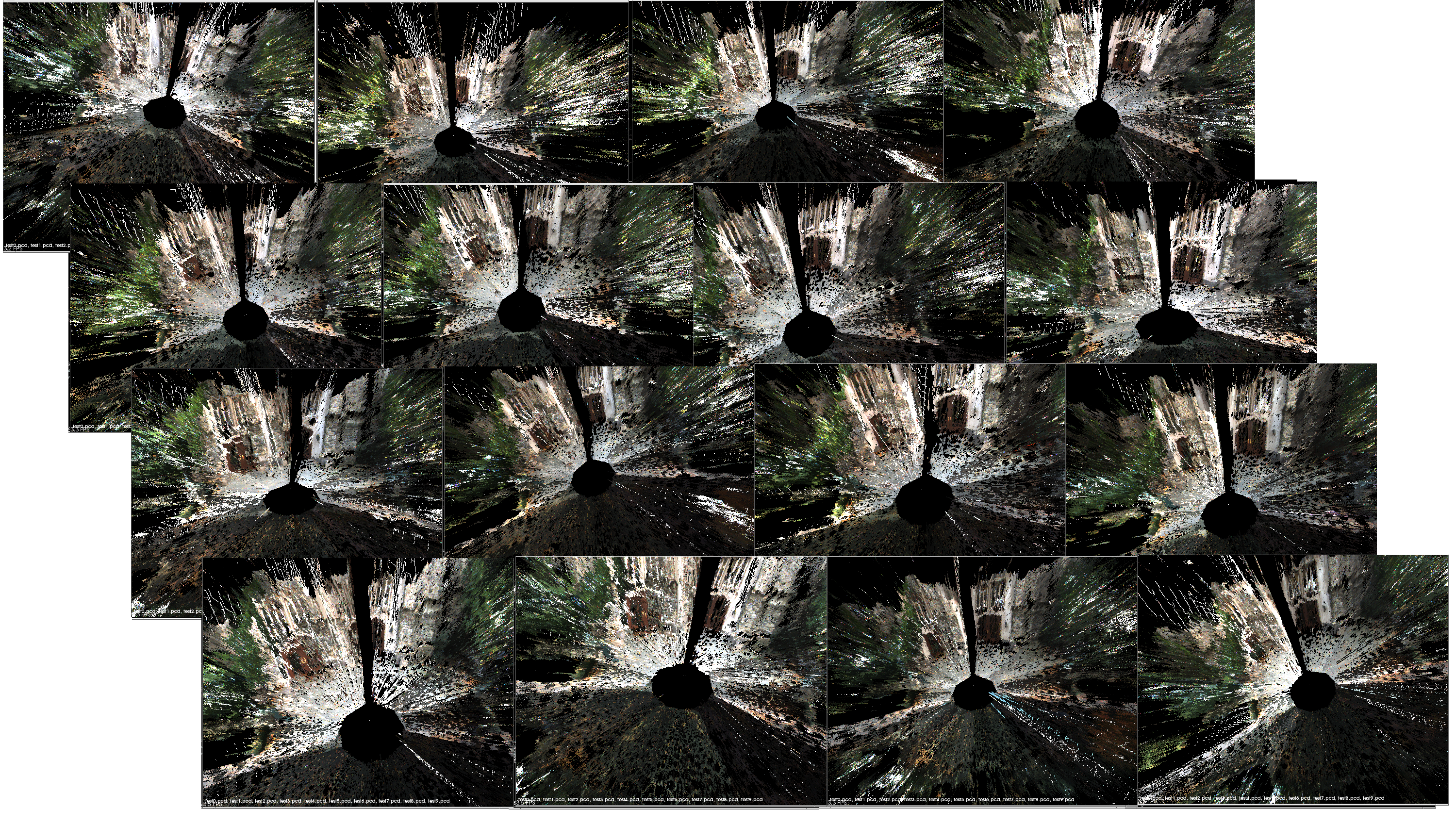

- Depth Figures for HSVD Conference Paper: GRID 14

- Image Figures for HSVD Conference Paper: GRID 14

Datasets

* GRID13_grids.tar.gz: Grid folder for HSVD project. 10/27/16

* GRID13_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID12_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID11_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID14_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID14_grids.tar.gz: Grid folder for HSVD project post smoothing. 12/5/16

* GRID12_grids.tar.gz: Grid folder for HSVD project post smoothing. 12/5/16

* GRID11_grids.tar.gz: Grid folder for HSVD project. 2/23/17
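
The cleanDump archives listed above hold stereo depth data that was cleaned with a statistical filter using a meanK of 50 and a standard deviation threshold of 0.2. The following is a minimal, self-contained sketch of how a filter of that kind works. It is not the Point Cloud Library implementation and not the program used to produce these files: the `Point3` type and the function names are hypothetical, the neighbour search is brute force, and the use of the sample standard deviation is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point3 {
    double x;
    double y;
    double z;
};

// Mean Euclidean distance from point i to its k nearest neighbours.
// Brute force, O(n log k) per point; adequate only for small clouds.
static double meanKnnDistance(const std::vector<Point3>& pts,
                              std::size_t i, std::size_t k) {
    std::vector<double> d;
    d.reserve(pts.size() - 1);
    for (std::size_t j = 0; j < pts.size(); ++j) {
        if (j == i) continue;
        const double dx = pts[i].x - pts[j].x;
        const double dy = pts[i].y - pts[j].y;
        const double dz = pts[i].z - pts[j].z;
        d.push_back(std::sqrt(dx * dx + dy * dy + dz * dz));
    }
    k = std::min(k, d.size());
    std::partial_sort(d.begin(), d.begin() + static_cast<std::ptrdiff_t>(k), d.end());
    double sum = 0.0;
    for (std::size_t n = 0; n < k; ++n) sum += d[n];
    return sum / static_cast<double>(k);
}

// Keep points whose mean neighbour distance is at most
// (global mean + stddevMult * global standard deviation).
std::vector<Point3> statisticalFilter(const std::vector<Point3>& pts,
                                      std::size_t meanK = 50,
                                      double stddevMult = 0.2) {
    assert(meanK > 0);
    if (pts.size() < 2) return pts;  // nothing to compare against

    std::vector<double> meanDist(pts.size());
    double sum = 0.0;
    double sumSq = 0.0;
    for (std::size_t i = 0; i < pts.size(); ++i) {
        meanDist[i] = meanKnnDistance(pts, i, meanK);
        sum += meanDist[i];
        sumSq += meanDist[i] * meanDist[i];
    }
    const double n = static_cast<double>(pts.size());
    const double mean = sum / n;
    // Sample variance (an assumption of this sketch), clamped against rounding error.
    const double variance = std::max(0.0, (sumSq - sum * sum / n) / (n - 1.0));
    const double threshold = mean + stddevMult * std::sqrt(variance);

    std::vector<Point3> kept;
    for (std::size_t i = 0; i < pts.size(); ++i) {
        if (meanDist[i] <= threshold) kept.push_back(pts[i]);
    }
    return kept;
}
```

A small standard deviation multiplier such as 0.2 makes the filter aggressive: any point whose neighbourhood is only slightly sparser than average is discarded, which suits noisy stereo depth at the cost of also removing some valid points.
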

This data is provided for general use without any warranty or support. Please email any questions to dlyons@fordham.edu, bbarriage@fordham.edu, and ldelsignore@fordham.edu.

Permissions

* Persons/group who can change the page:
  * Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

-- (c) Fordham University Robotics and Computer Vision

| I | Attachment | History | Action | Size | Date | Who | Comment |
|---|---|---|---|---|---|---|---|
| | Six_Database_part_1.bmp | r1 | manage | 1165.9 K | 2017-05-31 - 16:55 | DamianLyons | |
| | Six_Database_part_2.bmp | r1 | manage | 1165.9 K | 2017-05-31 - 16:56 | DamianLyons | |

Topic revision: r2 - 2017-05-31 - DamianLyons
