Difference: FRCVPublicProject (1 vs. 15)

Revision 15 2024-08-14 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

| ||||||||

| Changed: | ||||||||

| < < | Overview of Research Projects in Progress at the FRCV Lab | |||||||

| > > | Overview of Recent and Current Research Projects in the FRCV Lab | |||||||

Robotics for small and family farms

By 2050, the global population is expected to reach 9.7 billion, challenging food production to keep pace. Agricultural robotics can help by addressing labor shortages, reducing costs, and promoting sustainable practices. Large-agribusiness monoculture farming facilitates robotic integration; however, this farming method suffers from pest outbreaks and promotes soil depletion. Alternatively, robotics can support small and family farms, diversify agriculture, and enable competition with large agribusinesses. We will focus on a specific but significant requirement for this kind of application: the flexible and robust wide-area navigation that robots for livestock management need despite the changing visual appearance of the landscape due to weather and the growth of vegetation and crops (white paper with more motivating details: whiteppr.pdf). | ||||||||

| Line: 49 to 49 | ||||||||

| The quality of the improvement that our method makes is proportional to the divergence information that it has to work with. An avenue of future study is development of control strategies that improve divergence information by exploring more of the state space during deployment. | ||||||||

| Changed: | ||||||||

| < < | For more information: https://research.library.fordham.edu/frcv_videos/2 | |||||||

| > > | For more information: https://research.library.fordham.edu/frcv_videos/2 | |||||||

Using Air Disturbance Detection for Obstacle Avoidance in Drones | ||||||||

Revision 14 2024-08-13 - DamianLyons

| Line: 1 to 1 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab

Robotics for small and family farms | |||||||||

| Changed: | |||||||||

| < < | By 2050, the global population is expected to reach 9.7 billion, challenging food production to keep pace. Agricultural robotics can help by addressing labor shortages, reducing costs, and promoting sustainable practices. Large-agribusiness monoculture farming facilitates robotic integration; however, this farming method suffers from pest outbreaks and promotes soil depletion. Alternatively, robotics can support small and family farms, diversify agriculture, and enable competition with large agribusinesses. We will focus on a specific but significant requirement for this kind of application: the flexible and robust wide-area navigation that robots for livestock management need despite the changing visual appearance of the landscape due to weather and the growth of vegetation and crops (white paper with more motivating details: whiteppr.pdf). | ||||||||

| > > | By 2050, the global population is expected to reach 9.7 billion, challenging food production to keep pace. Agricultural robotics can help by addressing labor shortages, reducing costs, and promoting sustainable practices. Large-agribusiness monoculture farming facilitates robotic integration; however, this farming method suffers from pest outbreaks and promotes soil depletion. Alternatively, robotics can support small and family farms, diversify agriculture, and enable competition with large agribusinesses. We will focus on a specific but significant requirement for this kind of application: the flexible and robust wide-area navigation that robots for livestock management need despite the changing visual appearance of the landscape due to weather and the growth of vegetation and crops (white paper with more motivating details: whiteppr.pdf). | ||||||||

| Consider a herding robot teamed with a drone to locate and retrieve livestock that have strayed from their herd. Herding activity covers a potentially large and dynamically changing area. Large-area mapping is not an effective solution to this problem: it requires additional exploration, which diverts the robots from herding; dynamic obstacles such as new rainstorm debris could in any case block previously mapped paths; GPS coverage can be expected to be limited, for example in forested terrain, complicating map merging; finally, at the mapped destination, the robot must still find the animals, which may have moved. | |||||||||

| Line: 25 to 25 | |||||||||

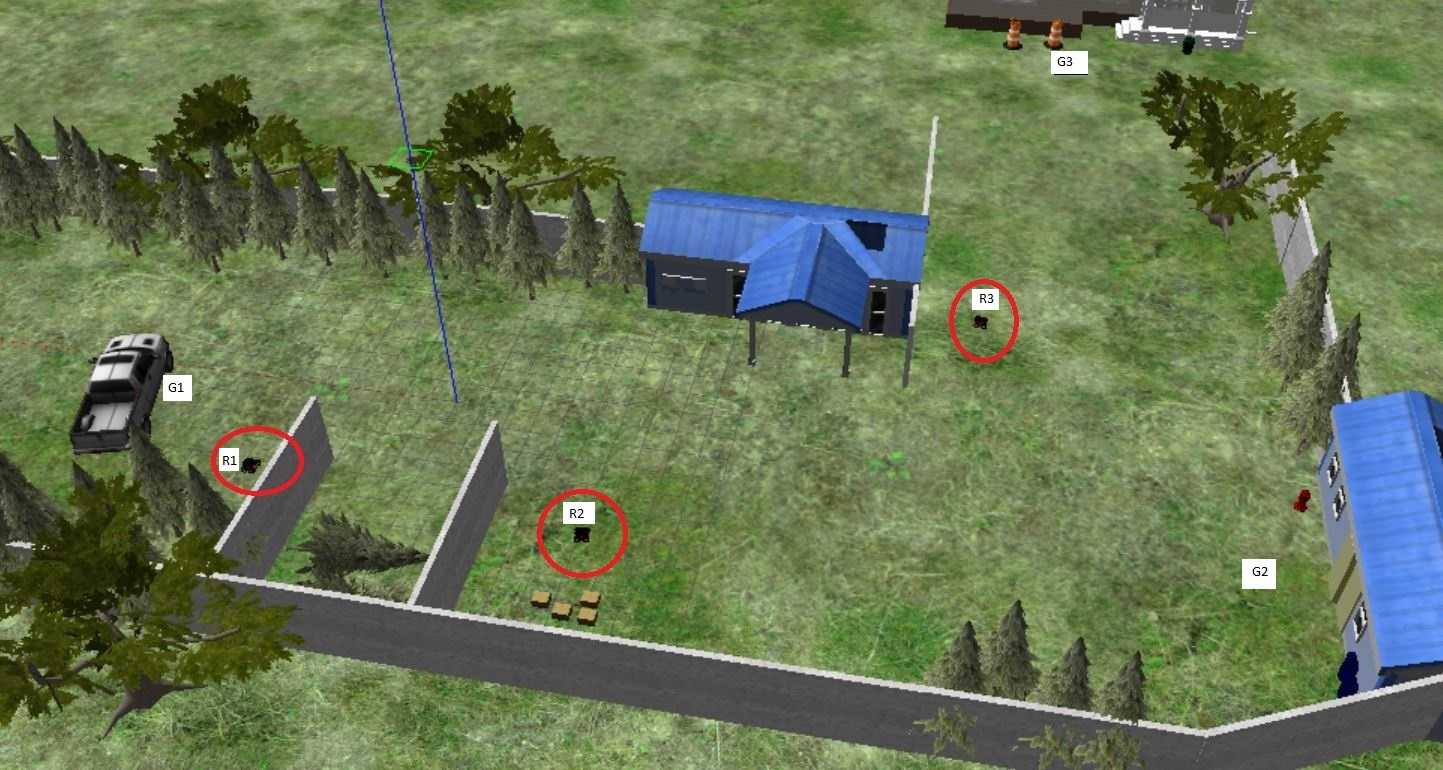

We have conducted WAVN experiments using grid simulations, ROS/Gazebo simulations, and lab experiments using Turtlebot3 and Pioneer robots. The image above shows a Gazebo simulation of three Pioneer robots in a small outdoor work area with plenty of out-of-view targets to navigate to. Our next step is to use WAVN for outdoor navigation to campus locations using a team of Pioneer robots. | |||||||||

| Added: | |||||||||

| > > | A Monte Carlo Framework for Incremental Improvement of Simulation Fidelity

D.M. Lyons (Fordham) and J. Finocchiaro, M. Novitzky and C. Korpela (United States Military Academy, West Point NY)

Simulation tools are widely used in robot program development, whether the program/controller is built by hand or using machine learning. At the very least, simulation allows a robot programmer to eliminate obvious program flaws. The availability of physics engines has produced simulations that can more accurately model physical behavior, making it more attractive to use simulation in conjunction with machine learning techniques to develop robot programs. However, a robot program validated with simulation, when operating in a real, unstructured environment, may come across phenomena that its designers just did not know to include in the simulation, even though the phenomenon could in fact be simulated if it were known a priori to be relevant. Examples of this kind of simulation 'reality gap' include inaccurate robot joint parameters, surface friction, object masses, sizes and locations.

This paper addresses closing the reality gap for simulations used to develop robot programs by combining sim-to-real and real-to-sim approaches. We will assume that the simulation is a black box, and we present a framework to coerce simulation behavior to more closely resemble real experience. The simulation has configuration parameters $\phi$. If deployment of the robot program ($\pi$) results in failure, as determined by a performance monitor, then our overall objective is for $\phi$ to be updated by the deployment experience and $\pi$ redeveloped to handle that experience. We expect this virtuous cycle of simulation, deployment and improvement to iterate.

The simulation is modeled as a transition function $T_{\phi}(s' \mid s, a)$ where $s$ is the current sensor data from the simulation, $a$ is the action to be carried out, $s'$ is the resulting sensor data after the action is taken, and $\phi$ is a setting of the configuration parameters for the simulation. Real-world experience will also be modeled as a transition function $T_r(s' \mid s, a)$. Two important novel aspects of our work are 1) a domain-independent proposal for $\phi$ (as opposed to the more domain-specific examples of domain randomization) and 2) a Monte Carlo method to modify $\phi$ based on a direct comparison of estimated transition functions (as opposed to the comparison of observations).

The approach collects simulation and real-world observations and builds conditional probability functions for them. These are used to generate paired roll-outs and to look for points of divergence in behavior. The divergences are employed to generate state-space kernels coercing the simulation into behaving more like observed reality within a region of the state space. The method handles situations in which the environment behaves differently than expected (motor divergence) and sensors behave differently than expected (sensor divergence).

The method was evaluated in field trials using ROS/Gazebo for simulation and a heavily modified Traxxas platform for outdoor deployment. The platform was evaluated first in the environment in which it was designed and second in an environment containing an unexpected ramp that would prevent it from reaching its objectives. Achieving objectives resulted in the system receiving a reward (as in reinforcement learning), and an Average Total Reward (ATR) graph was used to capture its performance. Our results support not just that the kernel approach can force the simulation to behave more like reality, but that the modification is such that a robot program with an improved control policy tested in the modified simulation also performs better in the real world.

This approach allowed us to spot immediately when the deployed robot did not behave as in the simulation, and to propose changes to the simulation to model the 'unexpected' phenomena seen in deployment. Manual update or reinforcement learning (we used both) can be used to 'fix' program code and test it in the modified simulation. Redeployment to the real world showed that the fixes were effective.

A crucial point of difference with other similar work is that they address the reality gap problem with a principled domain randomization approach, providing a range of environments for policy development and generating a policy that is robust along the right dimensions of variability. We address the same problem but from the perspective of making each simulation run more closely resemble reality. This is reflected in how the simulation is 'wrapped' by each approach: our approach requires a more invasive configuration (access to the Gazebo model information), but we argue that our configuration is less application-specific and more easily generalized. We are especially interested in generalization to higher-dimensional state spaces. The quality of the improvement that our method makes is proportional to the divergence information that it has to work with. An avenue of future study is the development of control strategies that improve divergence information by exploring more of the state space during deployment.

For more information: https://research.library.fordham.edu/frcv_videos/2 | ||||||||
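
As a rough illustration of comparing estimated transition functions, the following minimal sketch builds empirical tables for $T_{\phi}$ and $T_r$ from logged `(s, a, s')` samples and flags the state-action pairs where they disagree. It assumes a small discretized state space and uses total variation distance with an arbitrary threshold; the function names, the distance measure, and the sample data are all illustrative assumptions, not the method or code used in the paper.

```python
from collections import Counter, defaultdict


def estimate_transitions(samples):
    """Build an empirical T(s' | s, a) table from (s, a, s_next) tuples."""
    counts = defaultdict(Counter)
    for s, a, s_next in samples:
        counts[(s, a)][s_next] += 1
    return {
        key: {s_next: n / sum(c.values()) for s_next, n in c.items()}
        for key, c in counts.items()
    }


def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)


def divergence_points(t_sim, t_real, threshold=0.5):
    """Return (s, a) pairs seen in both tables whose outcomes differ strongly."""
    shared = set(t_sim) & set(t_real)
    return sorted(
        key for key in shared
        if total_variation(t_sim[key], t_real[key]) > threshold
    )


# Hypothetical logs: in simulation the robot always advances from state 1,
# but in deployment it usually stays put (for example, blocked by a ramp).
sim_log = [(0, "fwd", 1)] * 10 + [(1, "fwd", 2)] * 10
real_log = [(0, "fwd", 1)] * 10 + [(1, "fwd", 1)] * 9 + [(1, "fwd", 2)]

t_sim = estimate_transitions(sim_log)
t_real = estimate_transitions(real_log)
flagged = divergence_points(t_sim, t_real)
print(flagged)
```

In this toy setting, the flagged pairs mark the region of the state space where a correction to the simulation would be needed; how that correction is turned into a state-space kernel is outside the scope of this sketch.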

Using Air Disturbance Detection for Obstacle Avoidance in Drones | |||||||||

| Line: 132 to 157 | |||||||||

| |||||||||

| Added: | |||||||||

| > > |

| ||||||||

Revision 13 2024-08-09 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Added: | ||||||||

| > > | Robotics for small and family farms

By 2050, the global population is expected to reach 9.7 billion, challenging food production to keep pace. Agricultural robotics can help by addressing labor shortages, reducing costs, and promoting sustainable practices. Large-agribusiness monoculture farming facilitates robotic integration; however, this farming method suffers from pest outbreaks and promotes soil depletion. Alternatively, robotics can support small and family farms, diversify agriculture, and enable competition with large agribusinesses. We will focus on a specific but significant requirement for this kind of application: the flexible and robust wide-area navigation that robots for livestock management need despite the changing visual appearance of the landscape due to weather and the growth of vegetation and crops (white paper with more motivating details: whiteppr.pdf).

Consider a herding robot teamed with a drone to locate and retrieve livestock that have strayed from their herd. Herding activity covers a potentially large and dynamically changing area. Large-area mapping is not an effective solution to this problem: it requires additional exploration, which diverts the robots from herding; dynamic obstacles such as new rainstorm debris could in any case block previously mapped paths; GPS coverage can be expected to be limited, for example in forested terrain, complicating map merging; finally, at the mapped destination, the robot must still find the animals, which may have moved.

With colleagues Prof. Mohamed Rahouti and CS PhD student Nasim Paykari, we propose an alternate, groundbreaking approach that transcends the limitations imposed by environmental variability, focusing on map-less, visual coordination between the robot and drone. This methodology further leverages dynamic environmental adaptation and a lightweight blockchain approach to enhance visual landmark recognition and navigation.

By prioritizing visual cues and landmarks over purely map-based methods, our strategy facilitates robust, flexible navigation across changing agricultural landscapes. This approach not only addresses the immediate challenges of precision in location and task execution but also sets a new standard for the deployment of robotics in agriculture, particularly benefiting small and family farms by enabling them to maintain competitiveness and sustainability in the face of large agribusiness. | |||||||

Wide Area Visual Navigation (WAVN)

We are investigating a novel approach to navigation for a heterogeneous robot team operating for long durations in an environment where there are long- and short-term visual changes. Small-scale precision agriculture is one of our main applications: high-precision, high-reliability GPS can be an entrance barrier for small family farms. A successful solution to this challenge would open the way to revolutionizing small farming to compete with big agribusiness. The challenge is enormous, however: a family farm operating in a remote location experiences all the changes in terrain appearance and navigability that come with seasonal weather changes and dramatic weather events. Our work is a step in this direction. | ||||||||

| Line: 124 to 131 | ||||||||

| ||||||||

| Added: | ||||||||

| > > |

| |||||||

Revision 12 2022-12-31 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Added: | ||||||||

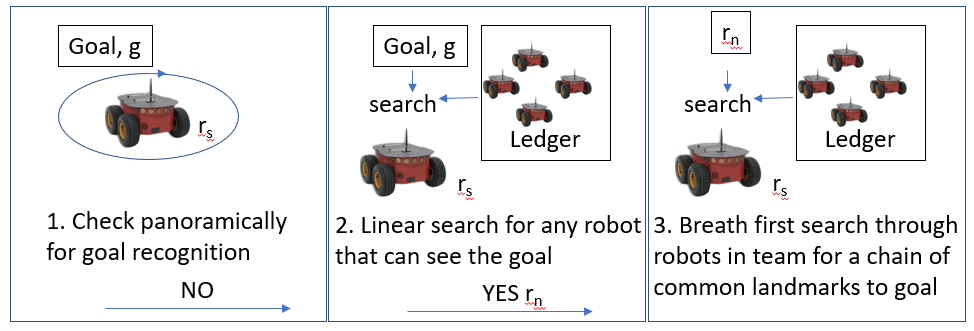

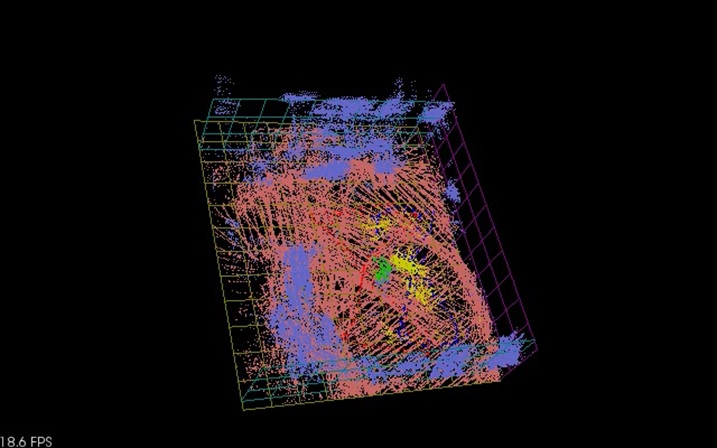

| > > | Wide Area Visual Navigation (WAVN)

We are investigating a novel approach to navigation for a heterogeneous robot team operating for long durations in an environment where there are long- and short-term visual changes. Small-scale precision agriculture is one of our main applications: high-precision, high-reliability GPS can be an entrance barrier for small family farms. A successful solution to this challenge would open the way to revolutionizing small farming to compete with big agribusiness. The challenge is enormous, however: a family farm operating in a remote location experiences all the changes in terrain appearance and navigability that come with seasonal weather changes and dramatic weather events. Our work is a step in this direction.

Our approach is based on visual homing, which is a simple and lightweight method for a robot to navigate to a visually defined target that is in its current field of view. We extend this approach to work with targets that are beyond the current field of view by leveraging visual information from all camera assets in the entire team (and potentially fixed camera assets on buildings or other available camera assets). To ensure efficient and secure distributed communication within the team, we employ distributed blockchain communication: team members regularly upload their visual panorama to the blockchain, which is then available to all team members in a safe and secure fashion. When a robot needs to navigate to a distant target, it queries the visual information from the rest of the team and establishes a set of intermediate visual targets it can sequentially navigate to using homing, ending with the final target.

For a short video introduction, see here. We are also investigating the synergy of blockchain and navigation methodologies to show that blockchain can be used to simplify navigation in addition to providing a distributed and secure communication channel. Key to the WAVN navigation approach is establishing an intermediate sequence of landmarks by looking for a chain of common landmarks between pairs of robots. We have investigated different ways in which robots can identify whether they are seeing the same landmark, and we show that using a CNN-based YOLO to segment a scene into common objects, followed by feature matching to identify whether objects are the same, outperforms feature matching on its own. Furthermore, using a group of objects as a landmark outperforms a single-object landmark. We are currently investigating whether object diversity in a group improves this even further.

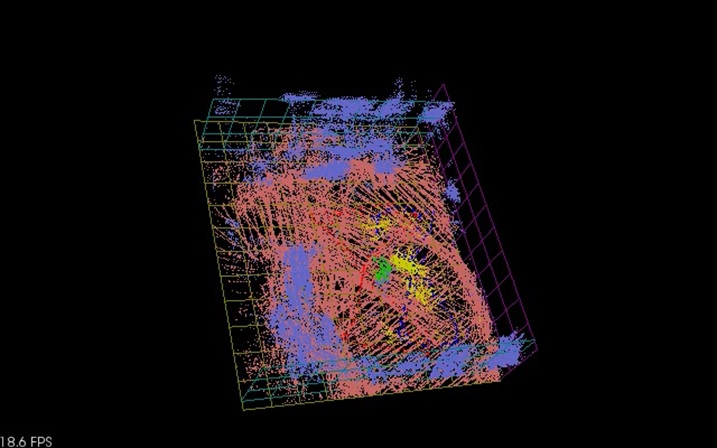

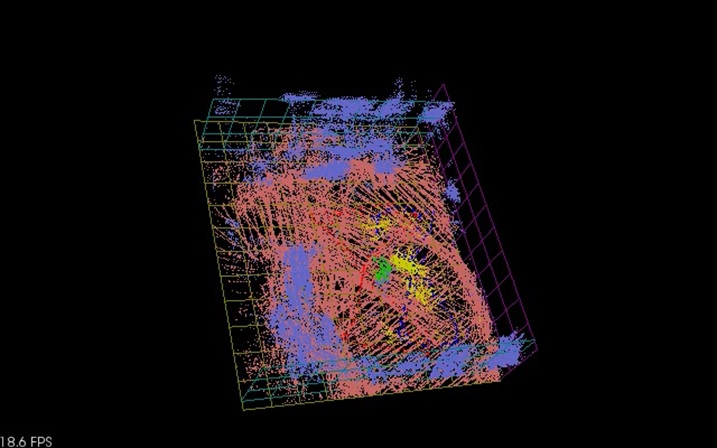

We have conducted WAVN experiments using grid simulations, ROS/Gazebo simulations, and lab experiments using Turtlebot3 and Pioneer robots. The image above shows a Gazebo simulation of three Pioneer robots in a small outdoor work area with plenty of out-of-view targets to navigate to. Our next step is to use WAVN for outdoor navigation to campus locations using a team of Pioneer robots. | |||||||
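
The idea of chaining common landmarks between pairs of robots can be pictured with a small graph search. The sketch below is only an illustration under simplifying assumptions: each robot's panorama is reduced to a set of landmark labels, two robots are linked when their sets overlap, and a breadth-first search returns the sequence of landmarks to home to. The function name `landmark_chain`, the robot names, and the landmark labels are hypothetical and not part of the WAVN implementation.

```python
from collections import deque


def landmark_chain(views, start, target):
    """Return landmarks to home to in order, ending with target, or None.

    views maps each robot name to the set of landmark labels it can see.
    """
    if target in views[start]:
        return [target]
    # parent[robot] = (previous robot, landmark shared with it)
    parent = {start: None}
    queue = deque([start])
    while queue:
        robot = queue.popleft()
        for other in sorted(views):
            if other in parent:
                continue
            common = views[robot] & views[other]
            if not common:
                continue
            parent[other] = (robot, min(common))
            if target in views[other]:
                # Walk back through the shared landmarks to the start robot.
                chain = [target]
                node = other
                while parent[node] is not None:
                    previous, shared = parent[node]
                    chain.append(shared)
                    node = previous
                return chain[::-1]
            queue.append(other)
    return None


# Hypothetical team: robot_a cannot see the silo, but robot_c can.
views = {
    "robot_a": {"tree", "barn"},
    "robot_b": {"barn", "gate"},
    "robot_c": {"gate", "silo"},
}
chain = landmark_chain(views, "robot_a", "silo")
print(chain)
```

Here `robot_a` would home to the barn, then the gate, and finally the silo. Deciding whether two robots really see the same landmark is the hard part and is replaced in this sketch by simple label equality.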

Using Air Disturbance Detection for Obstacle Avoidance in Drones

The use of unmanned aerial vehicles (drones) is expanding to commercial, scientific, and agricultural applications such as surveillance, product deliveries, and aerial photography. One challenge for applications of drones is detecting obstacles and avoiding collisions. Small drones operating in proximity to people especially need to detect the people around them and avoid injuring them. A typical solution to this issue is to use a camera or ultrasonic sensor for obstacle detection, or sometimes just manual control (teleoperation). However, these solutions have costs in battery lifetime, payload, and operator skill. Because small drones have a diminished ability to support any payload, it is difficult to add extra equipment to them. Fortunately, most drones are equipped with an inertial measurement unit (IMU). | ||||||||

| Line: 9 to 27 | ||||||||

| We chose a small drone, the Crazyflie 2.0, as the experimental platform. The Crazyflie 2.0 is a lightweight, open-source flying development platform based on a micro quadcopter. It has several built-in sensors, including a gyroscope and an accelerometer. ROS (Robot Operating System) is a set of software libraries and tools for modular robot applications. The point of ROS is to create a robotics standard. ROS has great simulation tools, such as Rviz and Gazebo, to help us run the simulation before conducting real experiments on drones. Currently there is little Crazyflie support in ROS [4]; however, we wish to use ROS to conduct our experimentation because it has become a de facto standard. More details here | ||||||||

| Changed: | ||||||||

| < < | Multilingual Static Analysis (MLSA) | |||||||

| > > | Multilingual Software Analysis (MLSA) | |||||||

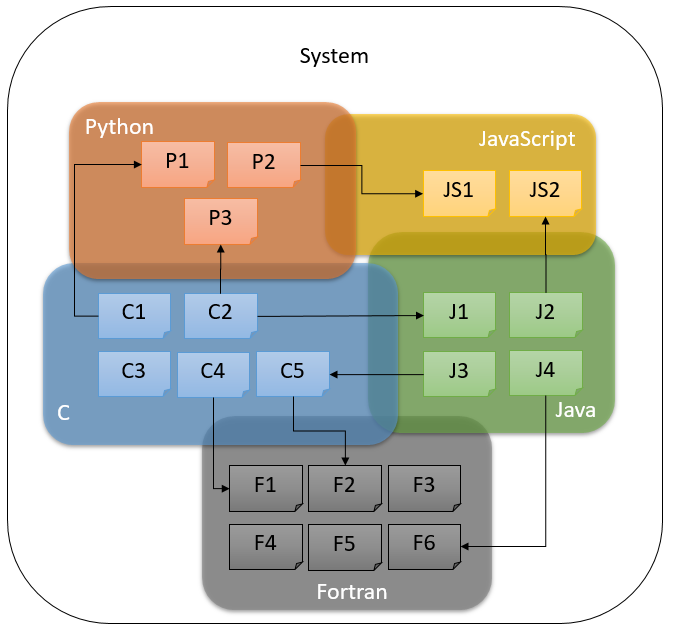

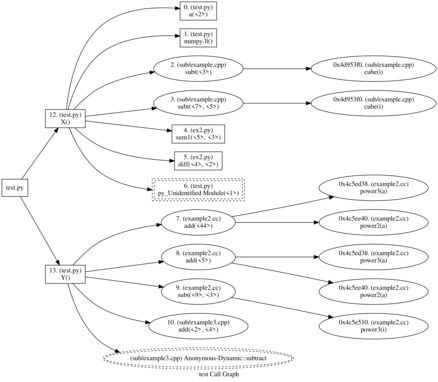

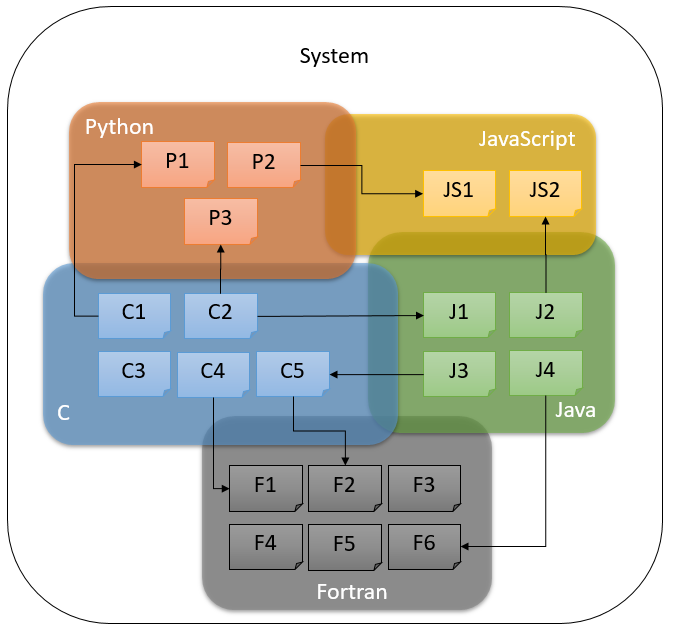

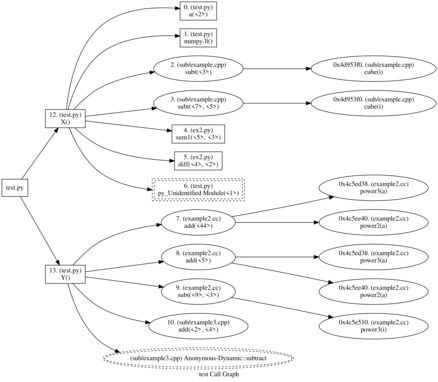

Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs (programs written in a single language), but since in practice many software systems or code bases are written in more than one language, this is less than ideal. Melissa produces tools to analyze programs written in more than one language and to generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | ||||||||
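
To make the notion of a call graph across multiple languages concrete, here is a minimal sketch that merges per-language call graphs and adds cross-language edges. It is not the MLSA tool set or its data format: the dictionary representation, the function names `merge_call_graphs` and `reachable`, and the example program are all assumptions chosen for illustration.

```python
def merge_call_graphs(graphs, cross_language_calls):
    """Merge per-language call graphs into one graph keyed by (language, name).

    graphs maps a language to {caller: set of callees} within that language.
    cross_language_calls is a list of ((lang, caller), (lang, callee)) pairs.
    """
    merged = {}
    for language, graph in graphs.items():
        for caller, callees in graph.items():
            node = (language, caller)
            merged.setdefault(node, set()).update(
                (language, callee) for callee in callees
            )
    for caller, callee in cross_language_calls:
        merged.setdefault(caller, set()).add(callee)
    return merged


def reachable(graph, root):
    """Return every function reachable from root, in any language."""
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, ()))
    return seen


# Hypothetical code base: a Python driver that calls into a C++ library.
graphs = {
    "python": {"main": {"load"}},
    "cpp": {"process": {"filter"}},
}
cross_calls = [(("python", "load"), ("cpp", "process"))]

merged = merge_call_graphs(graphs, cross_calls)
print(sorted(reachable(merged, ("python", "main"))))
```

A monolingual tool would stop at `load`; the merged graph shows that `main` also depends on the C++ functions `process` and `filter`.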

| Line: 103 to 121 | ||||||||

| ||||||||

| Added: | ||||||||

| > > |

| |||||||

Revision 11 2019-09-18 - LabTech

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

| ||||||||

| Changed: | ||||||||

| < < | Overview of Research Projects in Progress at the FRCV Lab | |||||||

| > > | Overview of Research Projects in Progress at the FRCV Lab

Using Air Disturbance Detection for Obstacle Avoidance in Drones

The use of unmanned aerial vehicles (drones) is expanding to commercial, scientific, and agricultural applications such as surveillance, product deliveries, and aerial photography. One challenge for applications of drones is detecting obstacles and avoiding collisions. Small drones operating in proximity to people especially need to detect the people around them and avoid injuring them. A typical solution to this issue is to use a camera or ultrasonic sensor for obstacle detection, or sometimes just manual control (teleoperation). However, these solutions have costs in battery lifetime, payload, and operator skill. Because small drones have a diminished ability to support any payload, it is difficult to add extra equipment to them. Fortunately, most drones are equipped with an inertial measurement unit (IMU).

The IMU can tell us the drone’s attitude and accelerations from the gyroscope and accelerometer. We note that there will be air disturbance in the vicinity of the drone when it is moving close to obstacles or other drones. The data from the gyroscope and accelerometer will change to reflect this. Our objective is to detect obstacles from this air disturbance by analyzing the data from the gyroscope and accelerometer. Air disturbance can have many causes, such as ground effect, proximity to people, or wind gusts from other directions. These situations can occur at the same time, which makes things more complicated. To make the experiment simpler, we detect only the air disturbance produced by flying close to or underneath an overhead drone.

We chose a small drone, the Crazyflie 2.0, as the experimental platform. The Crazyflie 2.0 is a lightweight, open-source flying development platform based on a micro quadcopter. It has several built-in sensors, including a gyroscope and an accelerometer.

ROS (Robot Operating System) is a set of software libraries and tools for modular robot applications. The point of ROS is to create a robotics standard. ROS has great simulation tools, such as Rviz and Gazebo, to help us run the simulation before conducting real experiments on drones. Currently there is little Crazyflie support in ROS [4]; however, we wish to use ROS to conduct our experimentation because it has become a de facto standard. More details here | |||||||
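
One simple way to picture detecting air disturbance from IMU data is to watch how much a signal fluctuates over a short window. The sketch below flags samples where the standard deviation of recent vertical accelerometer readings exceeds a threshold. The window length, the threshold, the function name, and the sample values are illustrative assumptions and do not come from the project's experiments.

```python
from statistics import pstdev


def detect_disturbance(samples, window=5, threshold=0.3):
    """Return indices where the last `window` samples fluctuate strongly.

    samples is a list of accelerometer readings (for example, z-axis, m/s^2).
    An index i is flagged when the population standard deviation of
    samples[i - window + 1 : i + 1] exceeds `threshold`.
    """
    flagged = []
    for end in range(window, len(samples) + 1):
        if pstdev(samples[end - window:end]) > threshold:
            flagged.append(end - 1)
    return flagged


# Hypothetical readings: steady hover followed by turbulent air.
calm = [9.81, 9.80, 9.82, 9.81, 9.80, 9.81]
gusty = [9.2, 10.5, 9.0, 10.7, 9.1]

flagged = detect_disturbance(calm + gusty)
print(flagged)
```

A fixed threshold like this cannot tell ground effect from a nearby drone or a wind gust; it only illustrates the kind of signal change the gyroscope and accelerometer data would show.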

Multilingual Static Analysis (MLSA)

Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs (programs written in a single language), but since in practice many software systems or code bases are written in more than one language, this is less than ideal. Melissa produces tools to analyze programs written in more than one language and to generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | ||||||||

| Changed: | ||||||||

| < < |    | |||||||

| > > |    | |||||||

TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad

| ||||||||

| Line: 45 to 53 | ||||||||

Drone project : Crazyflie | ||||||||

| Added: | ||||||||

| > > | Drones are an exciting kind of robot that has recently found its way into commercial mainstream robotics. The most recent high-profile example of this is their appearance in the opening ceremonies of the 2018 Winter Olympics in PyeongChang, South Korea. Their striking appearance earned the drones high acclaim from the audience, solidifying the idea of using drones as performers in a public arena, capable of carrying out emotion-filled acts.

Beyond this example, our vision is to utilize drones, more specifically drone swarms, not only to perform theatrical performances but also to operate as a collective entity that can communicate and interact meaningfully with ordinary people in daily life activities. Our thesis is that drone swarms can more effectively impart emotive communication than solo drones. For instance, drone swarms can play the role of a tour guide at attractions or museums, bringing tourists on a trip through the most notable points at the site. In emergency situations that require evacuation of large crowds, drone swarms can help guide and coordinate the movement of survivors towards safe areas, as well as signal first responders towards areas where help is needed the most.

Advantages of Drone Swarms

The main advantages of drone swarms over solo drones are the added dimensions of freedom. More specifically, with multiple drones, we can

Motivating Applications

Equipped with the ability to impart emotive messages, drone swarms could be used for crowd control and guidance, e.g.,

Technical Challenges

In order to construct drone swarms that can communicate, interact with, and operate within the public space, we believe that the following technical challenges need to be addressed. | |||||||

| This project page is here

Older Projects | ||||||||

Revision 10 2019-05-29 - LabTech

| Line: 1 to 1 | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | |||||||||||

| Added: | |||||||||||

| > > | Multilingual Static Analysis (MLSA)

Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs (programs written in a single language), but since in practice many software systems or code bases are written in more than one language, this is less than ideal. Melissa produces tools to analyze programs written in more than one language and to generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here.

| ||||||||||

TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad

| |||||||||||

| Line: 17 to 23 | |||||||||||

| |||||||||||

| Deleted: | |||||||||||

| < < | Multilingual Static Analysis (MLSA)

Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs (programs written in a single language), but since in practice many software systems or code bases are written in more than one language, this is less than ideal. Melissa produces tools to analyze programs written in more than one language and to generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | ||||||||||

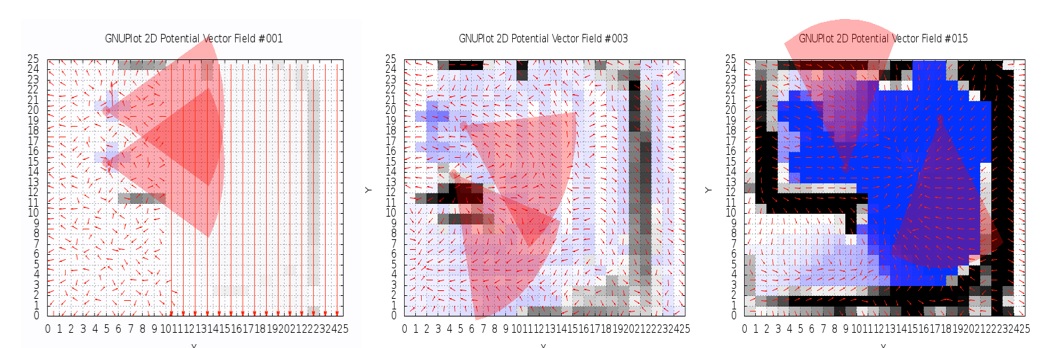

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm. In this work we propose an approach, the Space-Based Potential Field (SBPF) approach, to controlling multiple robots for area exploration missions that focus on robot dispersion. The SBPF method is based on a potential field approach that leverages knowledge of the overall bounds of the area to be explored. This additional information allows a simpler potential field control strategy for all robots but which nonetheless has good dispersion and overlap performance in all the multi-robot scenarios while avoiding potential minima. Both simulation and robot experimental results are presented as evidence. | |||||||||||

| Line: 69 to 70 | |||||||||||

| |||||||||||

| Added: | |||||||||||

| > > |

| ||||||||||

Revision 9 2019-05-08 - LabTech

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab

TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad | ||||||||

| Line: 18 to 18 | ||||||||

Multilingual Static Analysis (MLSA) | ||||||||

| Added: | ||||||||

| > > | Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs (programs written in a single language), but since in practice many software systems or code bases are written in more than one language, this is less than ideal. Melissa produces tools to analyze programs written in more than one language and to generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. | |||||||

| Changed: | ||||||||

| < < | Leveraging the static analysis work developed for DTRA, we are looking at multilingial (e.g., software that includes programs in several languages) to provide refactoring and other information for very large, multi language software code bases. This project is funded by a two year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | |||||||

| > > | Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | |||||||

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm | ||||||||

Revision 8 2019-01-17 - PhilipBal

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Changed: | ||||||||

| < < | TOAD Tracking: Behavioral Research of the Kihinasi Spray Toad | |||||||

| > > | TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad | |||||||

| Added: | ||||||||

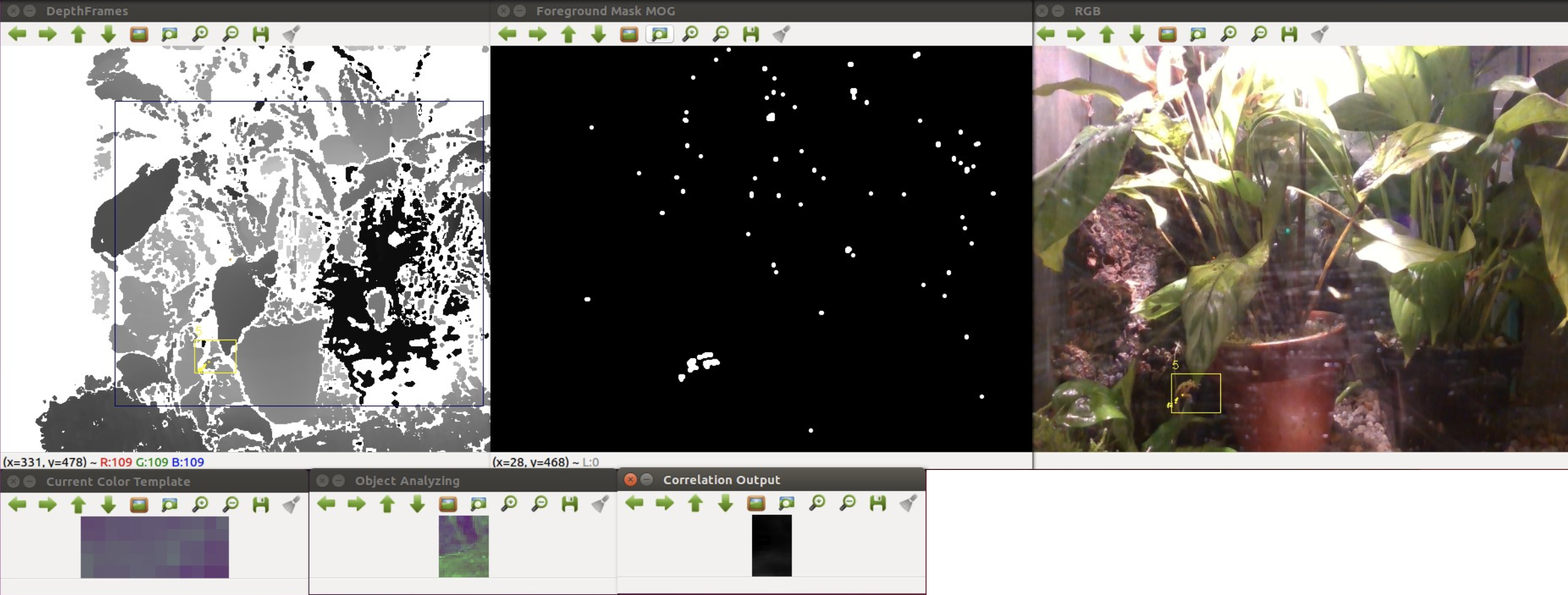

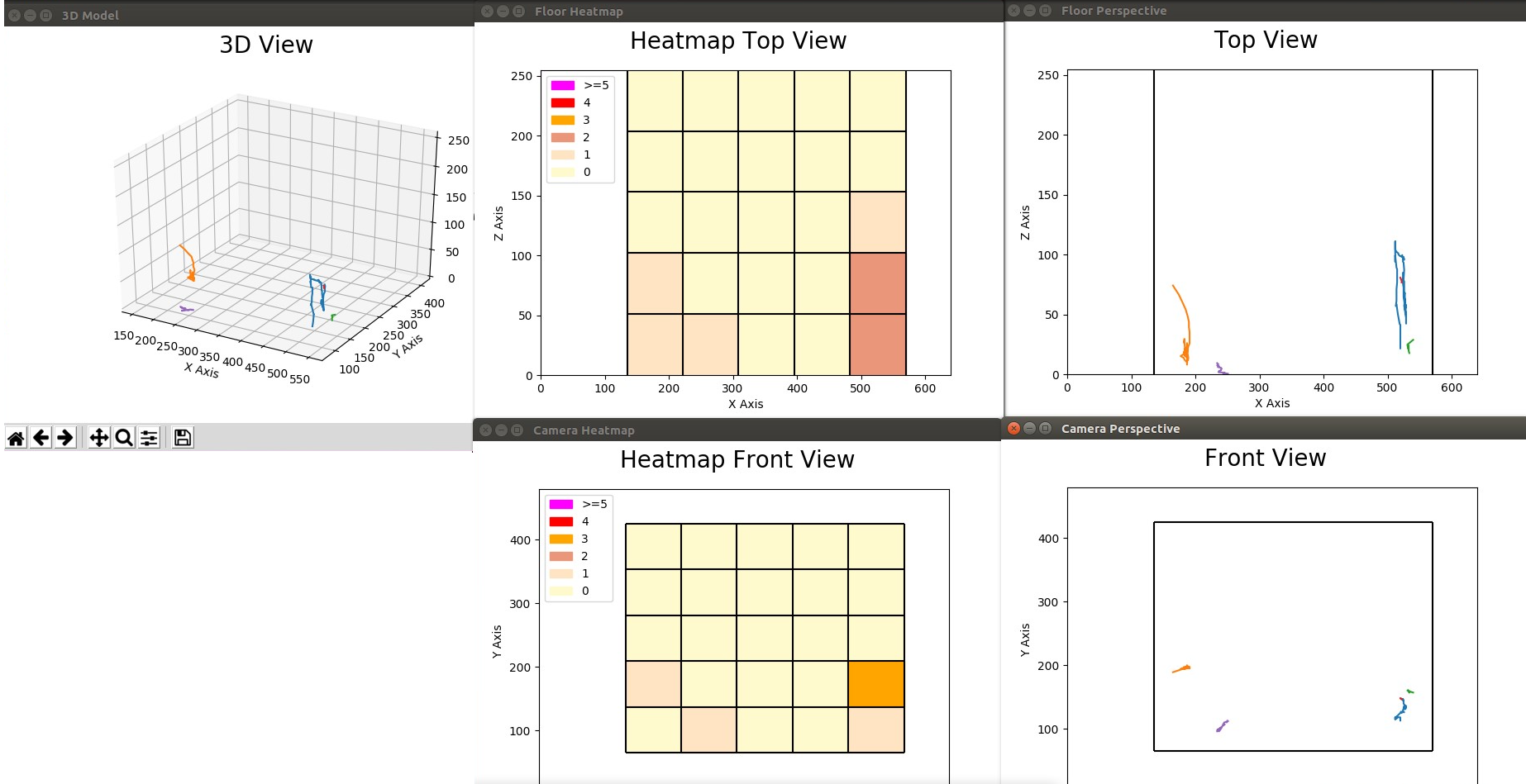

| > > | The Kihansi Spray Toad, officially classified as ‘extinct in the wild’, is being bred by the Bronx Zoo in an effort to reintroduce the species into the wild. With thousands of toads already bred in captivity, an opportunity to learn about the toads' behavior at scale presents itself for the first time. In order to gain information about the toads' behavior accurately and efficiently, we present an automated tracking system based on the Intel RealSense SR300 camera. As the average size of the toad is less than 1 inch, existing tracking systems prove ineffective. Thus, we developed a tracking system that uses a combination of depth tracking and color correlation to identify and track individual toads. The SR300 camera produces depth and color video sequences. Depth video sequences, in grayscale, are derived from an infrared sensor and register any motion that occurs, hence detecting moving toads. Color video sequences, in RGB, allow for color correlation while tracking targets. A template of the color of a toad is taken manually, once, as a universal example of what the color of a toad should be. This template is then compared against potential targets in every frame to increase the confidence that a toad has been detected rather than, for example, a moving leaf. The program detects and tracks toads from frame to frame, and produces a set of tracks in 2 and 3 dimensions, as well as 2-dimensional heat maps. For further details click here.

| |||||||
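The combination of depth-based motion detection and a color template check described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the lab's tracking code: the function names, the thresholds, the bounding-box convention, and the use of mean-color distance as a stand-in for color correlation are all hypothetical choices for this example.

```python
import numpy as np


def motion_mask(prev_depth, curr_depth, threshold=10):
    """Flag pixels whose depth value changed by more than `threshold`."""
    diff = np.abs(curr_depth.astype(np.int32) - prev_depth.astype(np.int32))
    return diff > threshold


def color_similarity(patch_rgb, template_rgb):
    """Score in [0, 1]; 1 means the mean patch color equals the template."""
    mean_color = patch_rgb.reshape(-1, 3).mean(axis=0)
    distance = np.linalg.norm(mean_color - np.asarray(template_rgb, dtype=float))
    max_distance = np.linalg.norm([255.0, 255.0, 255.0])
    return 1.0 - distance / max_distance


def is_toad_candidate(prev_depth, curr_depth, color_frame, box, template_rgb,
                      min_moving_fraction=0.2, min_similarity=0.9):
    """Accept a region only if it moved in depth AND matches the toad color.

    `box` is (row_start, row_end, col_start, col_end); all thresholds are
    assumed values chosen for illustration.
    """
    r0, r1, c0, c1 = box
    moving = motion_mask(prev_depth, curr_depth)[r0:r1, c0:c1]
    if moving.mean() < min_moving_fraction:
        return False  # nothing moved here, so there is no target to check
    patch = color_frame[r0:r1, c0:c1]
    return bool(color_similarity(patch, template_rgb) >= min_similarity)
```

Requiring both cues is what lets a moving leaf be rejected: it passes the motion test but fails the color test against the single manually captured template.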

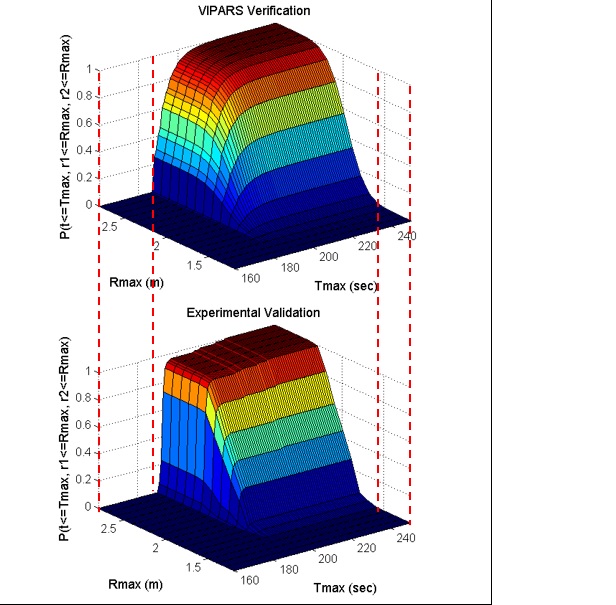

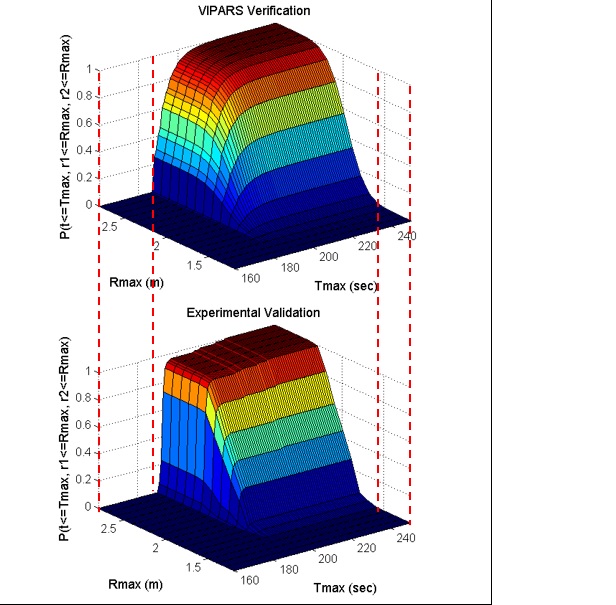

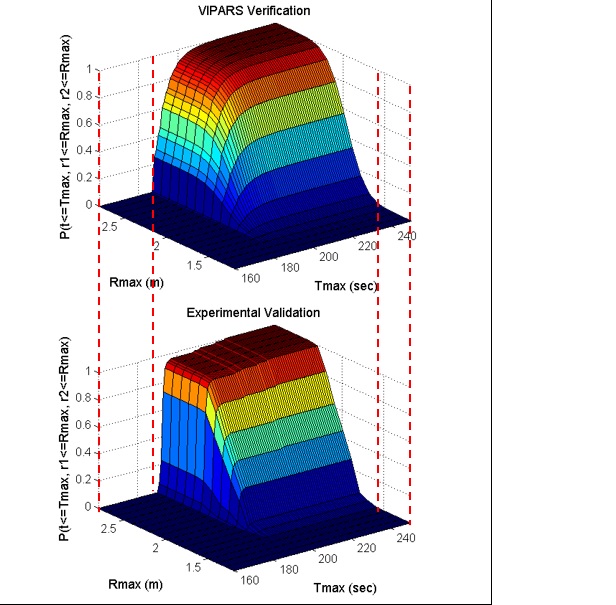

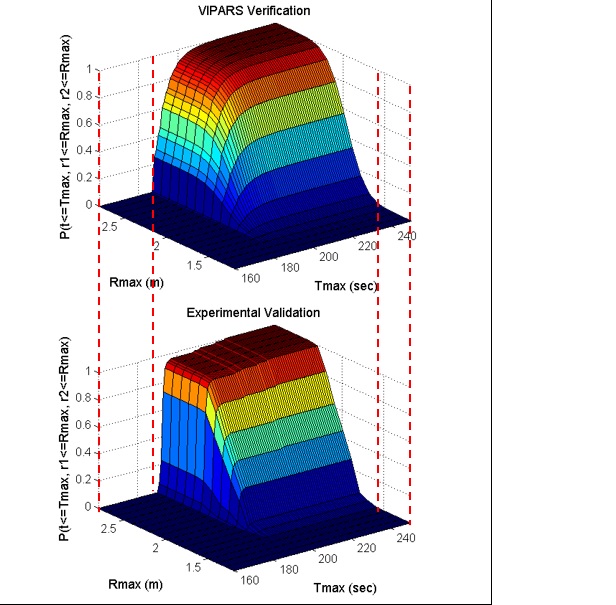

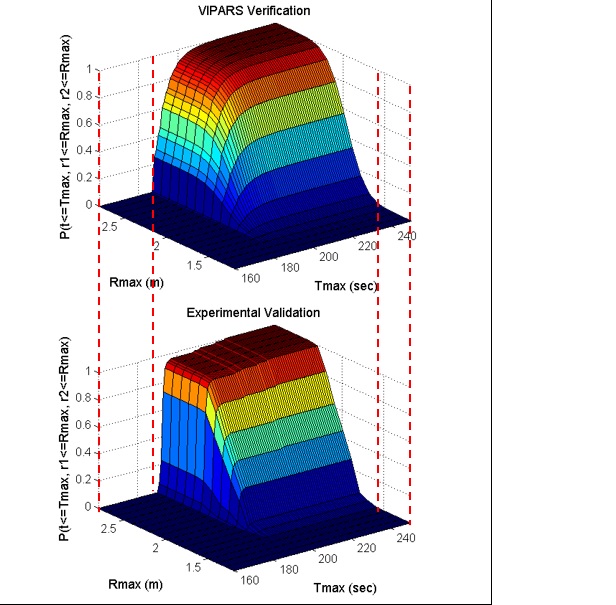

Getting it right the first time! Establishing performance guarantees for C-WMD autonomous robot missions

In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may only have a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovery of a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios consist of situations where the environment may be poorly characterized in advance in terms of spatial layout, and have time-critical performance requirements. It is our goal to provide reliable performance guarantees for whether or not the mission as specified may be successfully completed under these circumstances, and towards that end we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission. We have developed a novel static analysis approach to analysing behavior-based programs, coupled with a Bayesian network approach to predicting performance. Comparing predicted results to extensive empirical validation conducted at GATech's mobile robots lab, we have shown we can verify/predict realistic performance for waypoint missions, multiple robot missions, missions with uncertain obstacles, and missions including localization software. We are currently working on human-in-the-loop systems. | ||||||||

| Line: 29 to 37 | ||||||||

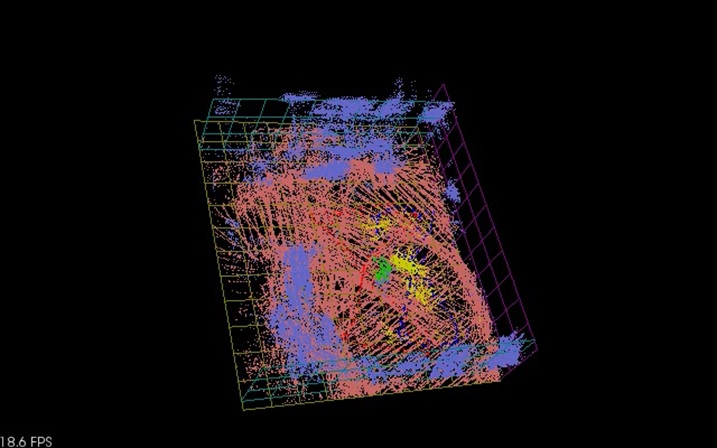

Ghosthunters! Filtering mutual sensor interference in closely working robot teams | ||||||||

| Changed: | ||||||||

| < < | We address the problem of fusing laser ranging data from multiple mobile robots that are surveying an area as part of a robot search and rescue or area surveillance mission. We are specifically interested in the case where members of the robot team are working in close proximity to each other. The advantage of this teamwork is that it greatly speeds up the surveying process; the area can be quickly covered even when the robots use a random motion exploration approach. However, the disadvantage of the close proximity is that it is possible, and even likely, that the laser ranging data from one robot include many depth readings caused by another robot. We refer to this as mutual interference. Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map, and a combination of both approaches. We show results for these approaches for several experiments for a two robot team operating in a confined indoor environment . | |||||||

| > > | We address the problem of fusing laser ranging data from multiple mobile robots that are surveying an area as part of a robot search and rescue or area surveillance mission. We are specifically interested in the case where members of the robot team are working in close proximity to each other. The advantage of this teamwork is that it greatly speeds up the surveying process; the area can be quickly covered even when the robots use a random motion exploration approach. However, the disadvantage of the close proximity is that it is possible, and even likely, that the laser ranging data from one robot include many depth readings caused by another robot. We refer to this as mutual interference. Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map, and a combination of both approaches. We show results for these approaches for several experiments for a two robot team operating in a confined indoor environment . | |||||||

| ||||||||

| Line: 52 to 58 | ||||||||

| ||||||||

| Changed: | ||||||||

| < < | <meta name="robots" content="noindex" /> | |||||||

| > > | <meta name="robots" content="noindex" /> | |||||||

| -- (c) Fordham University Robotics and Computer Vision | ||||||||

| Line: 60 to 66 | ||||||||

| ||||||||

| Added: | ||||||||

| > > |

| |||||||

Revision 7 2019-01-17 - PhilipBal

Revision 6 2017-11-15 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Line: 34 to 34 | ||||||||

| ||||||||

| Added: | ||||||||

| > > | Drone project: Crazyflie

This project page is here | |||||||

Older Projects

This includes the following projects that are temporarily on hiatus: | ||||||||

Revision 5 2017-07-12 - AnneMarieBogar

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Line: 6 to 6 | ||||||||

| In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may only have a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovery of a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios consist of situations where the environment may be poorly characterized in advance in terms of spatial layout, and have time-critical performance requirements. It is our goal to provide reliable performance guarantees for whether or not the mission as specified may be successfully completed under these circumstances, and towards that end we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission. We have developed a novel static analysis approach to analysing behavior-based programs, coupled with a Bayesian network approach to predicting performance. Comparing predicted results to extensive empirical validation conducted at GATech's mobile robots lab, we have shown we can verify/predict realistic performance for waypoint missions, multiple robot missions, missions with uncertain obstacles, and missions including localization software. We are currently working on human-in-the-loop systems. | ||||||||

| Changed: | ||||||||

| < < |  | |||||||

| > > |  | |||||||

| Changed: | ||||||||

| < < | Multilingual Static Analysis (MLSA) | |||||||

| > > | Multilingual Static Analysis (MLSA) | |||||||

| Changed: | ||||||||

| < < | Leveraging the static analysis work developed for DTRA, we are looking at multilingial (e.g., software that includes programs in several languages) to provide refactoring and other information for very large, multi language software code bases. This project is funded by a two year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | |||||||

| > > | Leveraging the static analysis work developed for DTRA, we are looking at multilingial (e.g., software that includes programs in several languages) to provide refactoring and other information for very large, multi language software code bases. This project is funded by a two year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | |||||||

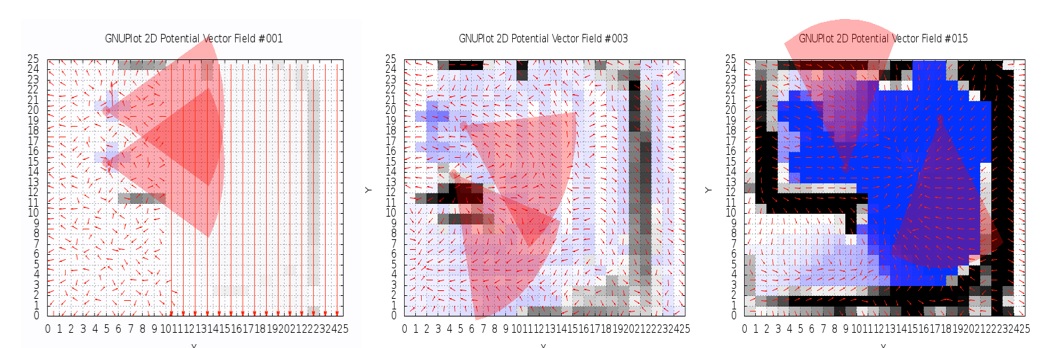

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm. In this work we propose an approach, the Space-Based Potential Field (SBPF) approach, to controlling multiple robots for area exploration missions that focus on robot dispersion. The SBPF method is based on a potential field approach that leverages knowledge of the overall bounds of the area to be explored. This additional information allows a simpler potential field control strategy for all robots but which nonetheless has good dispersion and overlap performance in all the multi-robot scenarios while avoiding potential minima. Both simulation and robot experimental results are presented as evidence. | ||||||||

| Changed: | ||||||||

| < < |  | |||||||

| > > |  | |||||||

Visual Homing with Stereovision | ||||||||

| Line: 24 to 24 | ||||||||

| In current work we have modified the HSV code to use a database of stored stereoimagery and we are conducting extensive testing of the algorithm. | ||||||||

| Changed: | ||||||||

| < < |  | |||||||

| > > |  | |||||||

Ghosthunters! Filtering mutual sensor interference in closely working robot teams | ||||||||

| Line: 32 to 32 | ||||||||

| Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map, and a combination of both approaches. We show results for these approaches for several experiments for a two robot team operating in a confined indoor environment . | ||||||||

| Changed: | ||||||||

| < < |  | |||||||

| > > |  | |||||||

Older Projects | ||||||||

| Line: 44 to 44 | ||||||||

| - Cognitive Robotics: ADAPT. Synchronizing real and synthetic imagery. | ||||||||

| Changed: | ||||||||

| < < | ||||||||

| > > |

| |||||||

| ||||||||

Revision 4 2016-07-26 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Line: 10 to 10 | ||||||||

Multilingual Static Analysis (MLSA) | ||||||||

| Changed: | ||||||||

| < < | Leveraging the static analysis work developed for DTRA, we are looking at multilingial (e.g., software that includes programs in several languages) to provide refactoring and other information for very large, multi language software code bases. This project is funded by a two year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. | |||||||

| > > | Leveraging the static analysis work developed for DTRA, we are looking at multilingial (e.g., software that includes programs in several languages) to provide refactoring and other information for very large, multi language software code bases. This project is funded by a two year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | |||||||

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm | ||||||||

Revision 3 2016-07-25 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab

Getting it right the first time! Establishing performance guarantees for C-WMD autonomous robot missions | ||||||||

| Changed: | ||||||||

| < < | In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may only have a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovery of a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios consist of situations where the environment may be poorly characterized in advance in terms of spatial layout, and have time-critical performance requirements. It is our goal to provide reliable performance guarantees for whether or not the mission as specified may be successfully completed under these circumstances, and towards that end we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission. | |||||||

| > > | In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may only have a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovery of a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios consist of situations where the environment may be poorly characterized in advance in terms of spatial layout, and have time-critical performance requirements. It is our goal to provide reliable performance guarantees for whether or not the mission as specified may be successfully completed under these circumstances, and towards that end we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission. We have developed a novel static analysis approach to analysing behavior-based programs, coupled with a Bayesian network approach to predicting performance. Comparing predicted results to extensive empirical validation conducted at GATech's mobile robots lab, we have shown we can verify/predict realistic performance for waypoint missions, multiple robot missions, missions with uncertain obstacles, and missions including localization software. We are currently working on human-in-the-loop systems. | |||||||

| ||||||||

| Added: | ||||||||

| > > | Multilingual Static Analysis (MLSA)

Leveraging the static analysis work developed for DTRA, we are looking at multilingual software (i.e., software that includes programs in several languages) to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. | |||||||

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm | ||||||||

| Line: 19 to 22 | ||||||||

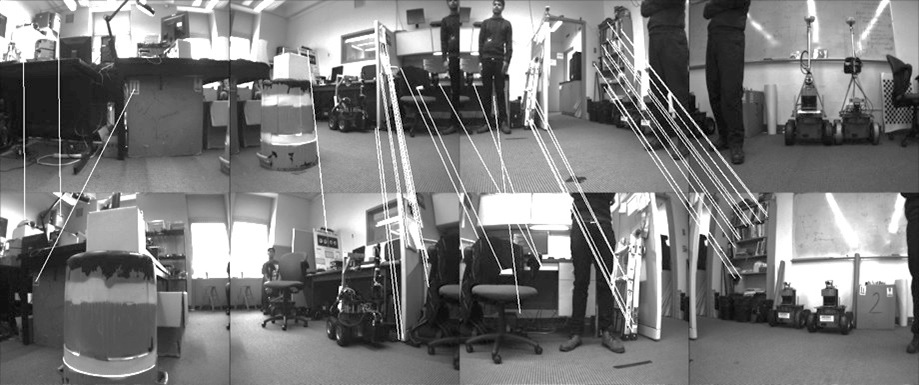

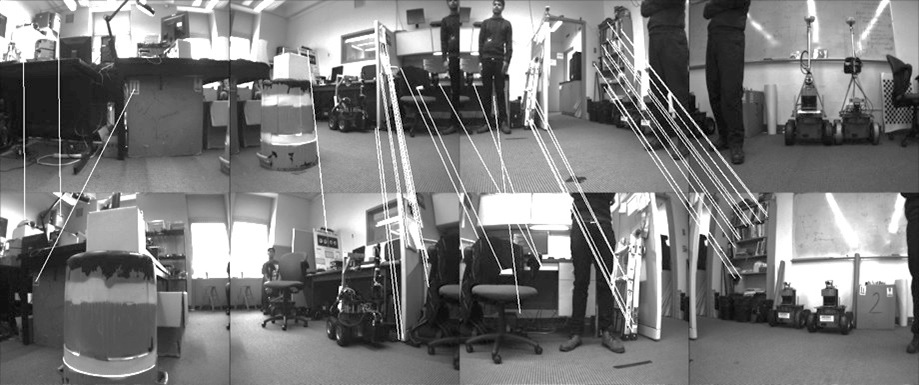

| Visual Homing is a navigation method based on comparing a stored image of a goal location to the current image to determine how to navigate to the goal location. It is theorized that insects such as ants and bees employ visual homing techniques to return to their nest or hive. Visual Homing has been applied to robot platforms using two main approaches: holistic and feature-based. Both methods aim at determining the distance and direction to the goal location. Visual navigation algorithms using Scale Invariant Feature Transform (SIFT) techniques have gained great popularity in recent years due to the robustness of the SIFT feature operator. There are existing visual homing methods that use the scale change information from SIFT, such as Homing in Scale Space (HiSS). HiSS uses the scale change information from SIFT to estimate the distance between the robot and the goal location to improve homing accuracy. Since the scale component of SIFT is discrete with only a small number of elements, the result is a rough measurement of distance with limited accuracy. We have developed a visual homing algorithm that uses stereo data, resulting in better homing performance. This algorithm, known as Homing with Stereovision, utilizes a stereo camera mounted on a pan-tilt unit, which is used to build composite wide-field images. We use the wide-field images coupled with the stereo data obtained from the stereo camera to extend the SIFT keypoint vector to include a new parameter, depth (z). Using this information, Homing with Stereovision determines the distance and orientation from the robot to the goal location. The algorithm is novel in its use of a stereo camera to perform visual homing. We compare our method with HiSS in a set of 200 indoor trials using two Pioneer 3-AT robots. We evaluate the performance of both methods using a set of performance metrics described in this paper and we show that Homing with Stereovision improves on HiSS for all the performance metrics for these trials. | ||||||||

| Added: | ||||||||

| > > | In current work we have modified the HSV code to use a database of stored stereoimagery and we are conducting extensive testing of the algorithm. | |||||||

Ghosthunters! Filtering mutual sensor interference in closely working robot teams | ||||||||

Revision 2 2016-05-15 - FengTang

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

Revision 1 2014-03-18 - DamianLyons

| Line: 1 to 1 | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Added: | |||||||||||

| > > |

Overview of Research Projects in Progress at the FRCV Lab

Getting it right the first time! Establishing performance guarantees for C-WMD autonomous robot missions

In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may only have a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovery of a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios consist of situations where the environment may be poorly characterized in advance in terms of spatial layout, and have time-critical performance requirements. It is our goal to provide reliable performance guarantees for whether or not the mission as specified may be successfully completed under these circumstances, and towards that end we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission.

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm. In this work we propose an approach, the Space-Based Potential Field (SBPF) approach, to controlling multiple robots for area exploration missions that focus on robot dispersion. The SBPF method is based on a potential field approach that leverages knowledge of the overall bounds of the area to be explored. This additional information allows a simpler potential field control strategy for all robots but which nonetheless has good dispersion and overlap performance in all the multi-robot scenarios while avoiding potential minima. Both simulation and robot experimental results are presented as evidence.

Visual Homing with Stereovision

Visual Homing is a navigation method based on comparing a stored image of a goal location to the current image to determine how to navigate to the goal location. It is theorized that insects such as ants and bees employ visual homing techniques to return to their nest or hive. Visual Homing has been applied to robot platforms using two main approaches: holistic and feature-based. Both methods aim at determining the distance and direction to the goal location. Visual navigation algorithms using Scale Invariant Feature Transform (SIFT) techniques have gained great popularity in recent years due to the robustness of the SIFT feature operator. There are existing visual homing methods that use the scale change information from SIFT, such as Homing in Scale Space (HiSS). HiSS uses the scale change information from SIFT to estimate the distance between the robot and the goal location to improve homing accuracy. Since the scale component of SIFT is discrete with only a small number of elements, the result is a rough measurement of distance with limited accuracy. We have developed a visual homing algorithm that uses stereo data, resulting in better homing performance. This algorithm, known as Homing with Stereovision, utilizes a stereo camera mounted on a pan-tilt unit, which is used to build composite wide-field images. We use the wide-field images coupled with the stereo data obtained from the stereo camera to extend the SIFT keypoint vector to include a new parameter, depth (z). Using this information, Homing with Stereovision determines the distance and orientation from the robot to the goal location. The algorithm is novel in its use of a stereo camera to perform visual homing. We compare our method with HiSS in a set of 200 indoor trials using two Pioneer 3-AT robots. We evaluate the performance of both methods using a set of performance metrics described in this paper and we show that Homing with Stereovision improves on HiSS for all the performance metrics for these trials.

Ghosthunters! Filtering mutual sensor interference in closely working robot teams

We address the problem of fusing laser ranging data from multiple mobile robots that are surveying an area as part of a robot search and rescue or area surveillance mission. We are specifically interested in the case where members of the robot team are working in close proximity to each other. The advantage of this teamwork is that it greatly speeds up the surveying process; the area can be quickly covered even when the robots use a random motion exploration approach. However, the disadvantage of the close proximity is that it is possible, and even likely, that the laser ranging data from one robot include many depth readings caused by another robot. We refer to this as mutual interference. Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map; and a combination of both approaches. We show results for these approaches for several experiments for a two-robot team operating in a confined indoor environment.
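The robot-centric idea, discarding laser readings that land where a teammate is estimated to be, might be sketched as below. This is a hypothetical 2D simplification rather than the method evaluated in the project: the function name, the circular footprint, and the `robot_radius` and `margin` values are assumptions chosen for illustration.

```python
import math


def filter_teammate_hits(points, teammate_xy, robot_radius=0.35, margin=0.15):
    """Drop 2D laser points (x, y) that fall inside the teammate's footprint.

    `teammate_xy` is the estimated teammate position in the same frame as
    `points`. The footprint is modeled as a circle of radius
    `robot_radius + margin` (both assumed values, in meters); the margin
    absorbs some error in the position estimate.
    """
    tx, ty = teammate_xy
    keep_radius = robot_radius + margin
    return [(x, y) for (x, y) in points
            if math.hypot(x - tx, y - ty) > keep_radius]


# Hypothetical scan: two wall points and two readings that hit the teammate.
scan = [(1.0, 1.0), (2.0, 2.0), (2.1, 2.2), (5.0, 5.0)]
cleaned = filter_teammate_hits(scan, teammate_xy=(2.0, 2.0))
```

A filter like this depends entirely on the quality of the teammate position estimate, which is one reason a map-centric check on local map regions is a natural complement to it.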

Older Projects

This includes the following projects that are temporarily on hiatus:

- Spatial Stereograms: a 3D landmark representation
- Efficient legged locomotion: Rotating Tripedal Mechanism
- Cognitive Robotics: ADAPT. Synchronizing real and synthetic imagery.

-- (c) Fordham University Robotics and Computer Vision

| ||||||||||
