Difference: FRCVPublicProject (7 vs. 8)

Revision 8 - 2019-01-17 - PhilipBal

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Changed: | ||||||||

| < < | TOAD Tracking: Behavioral Research of the Kihinasi Spray Toad | |||||||

| > > | TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad | |||||||

| Added: | ||||||||

| > > |

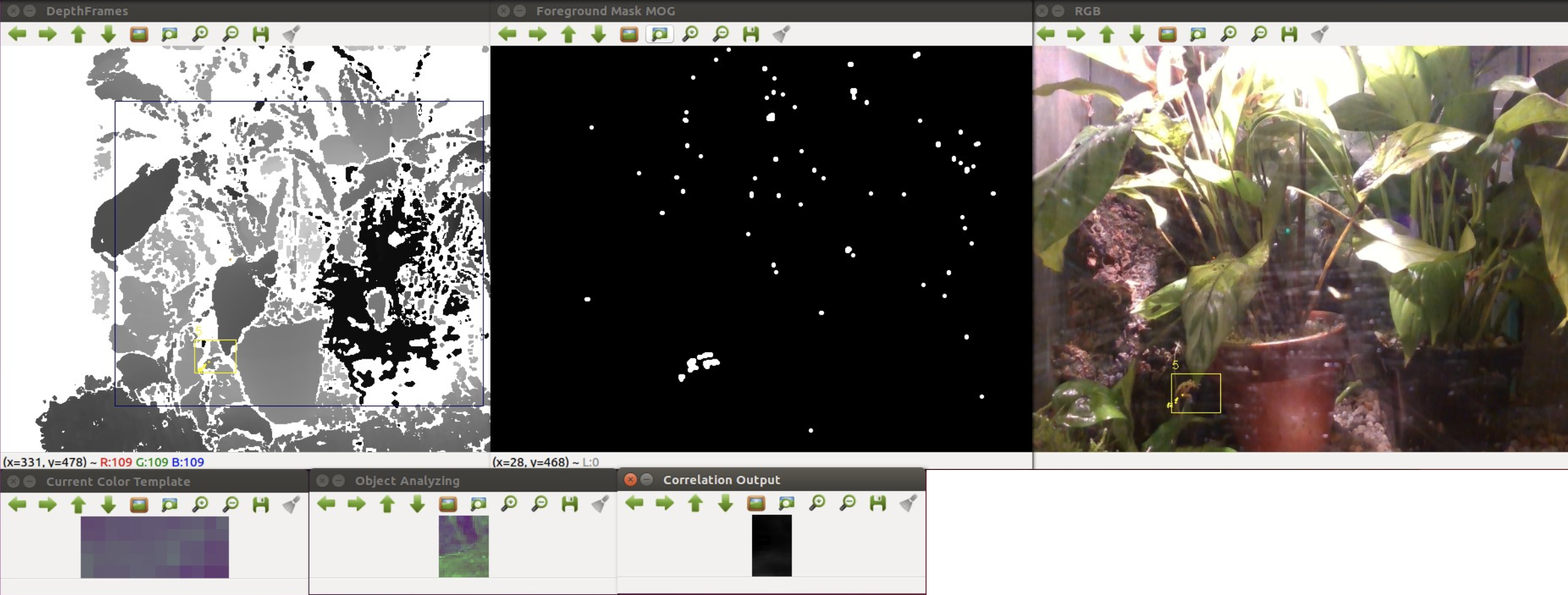

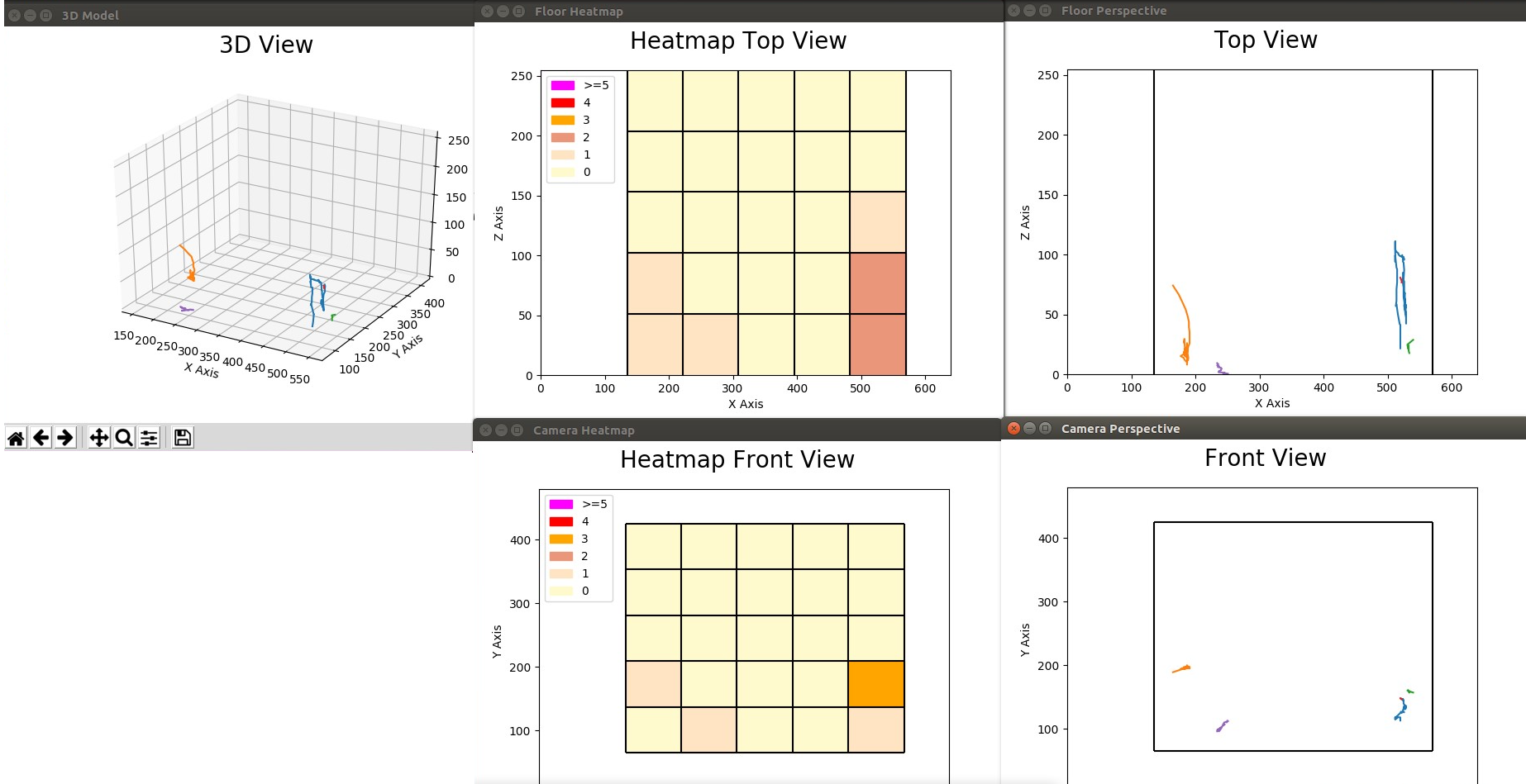

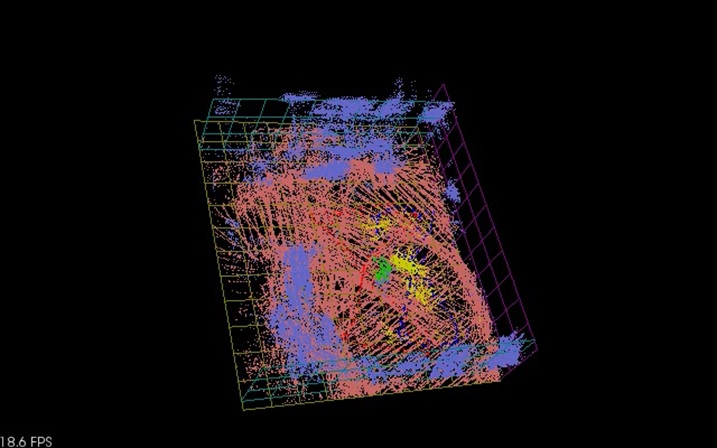

The Kihansi Spray Toad, officially classified as ‘extinct in the wild’, is being bred by the Bronx Zoo in an effort to reintroduce the species into the wild. With thousands of toads already bred in captivity, there is an opportunity to study the toads' behavior at scale for the first time. To gather information about the toads' behavior accurately and efficiently, we present an automated tracking system based on the Intel RealSense SR300 camera. Because the average toad is less than 1 inch in size, existing tracking systems prove ineffective. We therefore developed a tracking system that combines depth tracking and color correlation to identify and track individual toads. The SR300 camera produces both depth and color video sequences. The depth sequences, in grayscale, are derived from an infrared sensor and register any motion that occurs, thereby detecting moving toads. The color sequences, in RGB, allow for color correlation while tracking targets. A template of a toad's color is taken manually, once, as a universal example of what the color of a toad should be. This template is then compared against potential targets in every frame to increase the confidence that a toad has been detected rather than, for example, a moving leaf. The program detects and tracks toads from frame to frame and produces a set of tracks in two and three dimensions, as well as two-dimensional heat maps. For further details click here.

| |||||||
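The detection step described above (motion found in the depth stream, then confirmed against a color template) can be illustrated with a minimal sketch. This is not the lab's implementation: the function names, the thresholds, and the use of cosine similarity as a stand-in for the color correlation are all assumptions made for illustration, and frames are represented as plain nested lists rather than SR300 camera output.

```python
from math import sqrt


def motion_mask(prev_depth, curr_depth, threshold):
    """Mark pixels whose depth value changed by more than `threshold`."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_depth, curr_depth)
    ]


def color_similarity(pixel_rgb, template_rgb):
    """Cosine similarity between two RGB triples (assumed stand-in metric)."""
    dot = sum(a * b for a, b in zip(pixel_rgb, template_rgb))
    norm = sqrt(sum(a * a for a in pixel_rgb)) * sqrt(sum(b * b for b in template_rgb))
    return dot / norm if norm else 0.0


def detect_candidates(prev_depth, curr_depth, color, template_rgb,
                      depth_threshold=5, min_similarity=0.95):
    """Return (x, y) pixels that moved in depth AND match the color template.

    Both thresholds are hypothetical values chosen for the example.
    """
    mask = motion_mask(prev_depth, curr_depth, depth_threshold)
    hits = []
    for y, row in enumerate(mask):
        for x, moved in enumerate(row):
            if moved and color_similarity(color[y][x], template_rgb) >= min_similarity:
                hits.append((x, y))
    return hits
```

Requiring both cues is what lets a moving leaf be rejected: it triggers the depth mask but fails the color check. A real tracker would additionally group pixels into blobs and associate them across frames.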

Getting it right the first time! Establishing performance guarantees for C-WMD autonomous robot missions

In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may have only a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular, we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovering a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. Typical scenarios involve environments that may be poorly characterized in advance in terms of spatial layout, together with time-critical performance requirements. Our goal is to provide reliable performance guarantees for whether or not the mission as specified can be successfully completed under these circumstances. Towards that end, we have developed a set of specialized software tools to provide guidance to an operator/commander prior to deployment of a robot tasked with such a mission. We have developed a novel static analysis approach to analyzing behavior-based programs, coupled with a Bayesian network approach to predicting performance. Comparing predicted results to extensive empirical validation conducted at GATech's mobile robots lab, we have shown that we can verify/predict realistic performance for waypoint missions, multiple-robot missions, missions with uncertain obstacles, and missions including localization software. We are currently working on human-in-the-loop systems. | ||||||||

| Line: 29 to 37 | ||||||||

Ghosthunters! Filtering mutual sensor interference in closely working robot teams | ||||||||

| Changed: | ||||||||

| < < | We address the problem of fusing laser ranging data from multiple mobile robots that are surveying an area as part of a robot search and rescue or area surveillance mission. We are specifically interested in the case where members of the robot team are working in close proximity to each other. The advantage of this teamwork is that it greatly speeds up the surveying process; the area can be quickly covered even when the robots use a random motion exploration approach. However, the disadvantage of the close proximity is that it is possible, and even likely, that the laser ranging data from one robot include many depth readings caused by another robot. We refer to this as mutual interference. Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map, and a combination of both approaches. We show results for these approaches for several experiments for a two robot team operating in a confined indoor environment . | |||||||

| > > | We address the problem of fusing laser ranging data from multiple mobile robots that are surveying an area as part of a robot search and rescue or area surveillance mission. We are specifically interested in the case where members of the robot team are working in close proximity to each other. The advantage of this teamwork is that it greatly speeds up the surveying process; the area can be quickly covered even when the robots use a random motion exploration approach. However, the disadvantage of the close proximity is that it is possible, and even likely, that the laser ranging data from one robot include many depth readings caused by another robot. We refer to this as mutual interference. Using a team of two Pioneer 3-AT robots with tilted SICK LMS-200 laser sensors, we evaluate several techniques for fusing the laser ranging information so as to eliminate the mutual interference. There is an extensive literature on the mapping and localization aspect of this problem. Recent work on mapping has begun to address dynamic or transient objects. Our problem differs from the dynamic map problem in that we look at one kind of transient map feature, other robots, and we know that we wish to completely eliminate the feature. We present and evaluate three different approaches to the map fusion problem: a robot-centric approach, based on estimating team member locations; a map-centric approach, based on inspecting local regions of the map; and a combination of both approaches. We show results for these approaches from several experiments with a two-robot team operating in a confined indoor environment. | |||||||

| ||||||||
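To make the robot-centric idea concrete, the following is a minimal sketch under stated assumptions: it works in a flat 2D plane (the project uses tilted sensors, which this ignores), it assumes the teammate's position estimate is already available, and the function names and the exclusion radius are hypothetical. It shows only the general principle of discarding laser returns that fall near a teammate's estimated location, not the method evaluated in the project.

```python
from math import cos, sin, hypot


def scan_to_points(robot_pose, ranges, start_angle, angle_step):
    """Convert a planar laser scan into world-frame (x, y) points.

    robot_pose is (x, y, heading_in_radians); ranges are in meters.
    """
    x, y, heading = robot_pose
    points = []
    for i, r in enumerate(ranges):
        angle = heading + start_angle + i * angle_step
        points.append((x + r * cos(angle), y + r * sin(angle)))
    return points


def remove_teammate_hits(points, teammate_xy, exclusion_radius):
    """Drop points within `exclusion_radius` of the teammate's estimated position."""
    tx, ty = teammate_xy
    return [p for p in points if hypot(p[0] - tx, p[1] - ty) > exclusion_radius]
```

The exclusion radius trades off two errors: too small and parts of the teammate leak into the map as "ghosts", too large and genuine structure near the teammate is erased. A map-centric check on local map regions, as described above, is one way to compensate for errors in the position estimate.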

| Line: 52 to 58 | ||||||||

| ||||||||

| Changed: | ||||||||

| < < | <meta name="robots" content="noindex" /> | |||||||

| > > | <meta name="robots" content="noindex" /> | |||||||

| -- (c) Fordham University Robotics and Computer Vision | ||||||||

| Line: 60 to 66 | ||||||||

| ||||||||

| Added: | ||||||||

| > > |

| |||||||
