Difference: FRCVPublicProject (2 vs. 3)

Revision 3 - 2016-07-25 - DamianLyons


Overview of Research Projects in Progress at the FRCV Lab

Getting it right the first time! Establishing performance guarantees for C-WMD autonomous robot missions


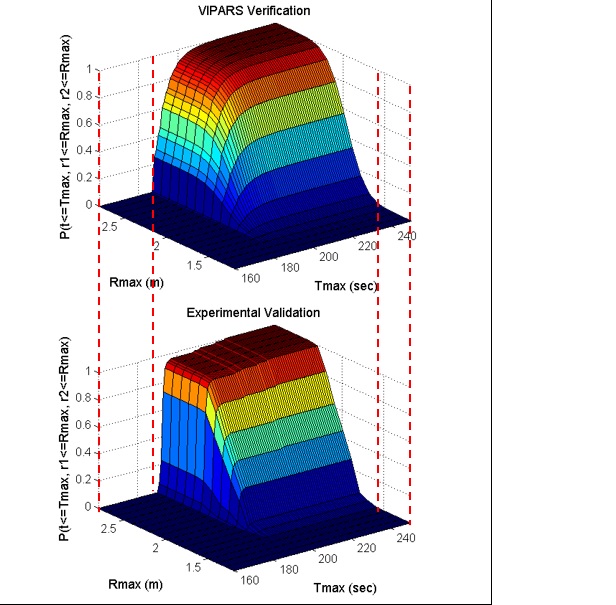

In research being conducted for the Defense Threat Reduction Agency (DTRA), we are concerned with robot missions that may have only a single opportunity for successful completion, with serious consequences if the mission is not completed properly. In particular, we are investigating missions for Counter-Weapons of Mass Destruction (C-WMD) operations, which require discovering a WMD within a structure and then either neutralizing it or reporting its location and existence to the command authority. In typical scenarios, the spatial layout of the environment may be poorly characterized in advance, and the mission has time-critical performance requirements. Our goal is to provide reliable guarantees of whether the mission, as specified, can be completed successfully under these circumstances; toward that end, we have developed a set of specialized software tools that provide guidance to an operator/commander before a robot is deployed on such a mission. We have developed a novel static analysis approach to analyzing behavior-based programs, coupled with a Bayesian network approach to predicting performance. By comparing predicted results with extensive empirical validation conducted at GATech's mobile robot lab, we have shown that we can verify and predict realistic performance for waypoint missions, multi-robot missions, missions with uncertain obstacles, and missions that include localization software. We are currently working on human-in-the-loop systems.
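The lab's tools are not reproduced here. As a hedged illustration of the Bayesian-network half of the approach only, the sketch below answers two queries on a tiny, hypothetical network in which an unexpected obstacle and poor localization both influence whether a waypoint mission reaches its goal before the deadline. Inference is exact, by enumeration over the joint distribution. The variable names, network structure, and every probability are invented for illustration; the sketch does not show how the static analysis of a behavior-based program would produce such a network.

```python
from itertools import product

# Hypothetical parameters for illustration only; these are not measured values.
P_OBSTACLE = 0.3   # P(an unexpected obstacle blocks the planned route)
P_GOOD_LOC = 0.9   # P(localization error stays within tolerance)

# Hypothetical conditional probability table:
# P(robot reaches the goal before the deadline | obstacle, good_loc)
P_ON_TIME = {
    (False, True): 0.95,
    (False, False): 0.70,
    (True, True): 0.80,
    (True, False): 0.40,
}


def bernoulli(p, value):
    """Probability that a binary variable with P(True) = p takes the given value."""
    return p if value else 1.0 - p


def joint(obstacle, good_loc, on_time):
    """Joint probability of one full assignment, factored along the edges
    obstacle -> on_time <- good_loc."""
    return (bernoulli(P_OBSTACLE, obstacle)
            * bernoulli(P_GOOD_LOC, good_loc)
            * bernoulli(P_ON_TIME[(obstacle, good_loc)], on_time))


def p_on_time():
    """Predictive query P(on_time): sum the joint over both parent variables."""
    return sum(joint(o, l, True) for o, l in product((False, True), repeat=2))


def p_obstacle_given_late():
    """Diagnostic query P(obstacle | not on_time), by Bayes' rule over the joint."""
    late_with_obstacle = sum(joint(True, l, False) for l in (False, True))
    late_total = sum(joint(o, l, False) for o, l in product((False, True), repeat=2))
    return late_with_obstacle / late_total


if __name__ == "__main__":
    print(f"P(on time)         = {p_on_time():.4f}")
    print(f"P(obstacle | late) = {p_obstacle_given_late():.4f}")
```

With these invented numbers the sketch prints P(on time) = 0.8755. Enumeration is exponential in the number of variables, which is acceptable for a toy network like this one; larger networks would call for variable elimination or a dedicated inference library.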


Multilingual Static Analysis (MLSA)

Leveraging the static analysis work developed for DTRA, we are investigating the analysis of multilingual software (i.e., software that includes programs written in several languages) to provide refactoring and other information for very large, multi-language code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment.
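The MLSA tools themselves are not described in detail here. As a minimal, hypothetical sketch of what multilingual static analysis involves, the code below links a Python module to a C source file across the `ctypes` boundary: it uses Python's standard `ast` module to find functions called through a `ctypes.CDLL` handle, a deliberately rough regular expression to find C function definitions, and then reports which cross-language calls resolve. The sample sources, the regex, and all function names are assumptions made for illustration, not part of the MLSA tools.

```python
import ast
import re

# Hypothetical two-language code base: a Python module that calls a C library via ctypes.
PY_SOURCE = '''
import ctypes
lib = ctypes.CDLL("./libgeom.so")
area = lib.poly_area(pts, n)
lib.free_poly(pts)
lib.missing_fn()
'''

C_SOURCE = '''
double poly_area(const double *pts, int n) { return 0.0; }
void free_poly(double *pts) { }
static int helper(int x) { return x; }
'''

# Deliberately rough pattern for C function definitions (type, name, params, "{").
# A real tool would use a proper C parser instead.
C_DEF = re.compile(r"^\s*(?:static\s+)?[\w\s*]+?\b(\w+)\s*\([^;{]*\)\s*\{", re.MULTILINE)


def _is_cdll_call(node):
    """True for ctypes.CDLL(...) or CDLL(...) calls."""
    if not isinstance(node, ast.Call):
        return False
    func = node.func
    return ((isinstance(func, ast.Attribute) and func.attr == "CDLL")
            or (isinstance(func, ast.Name) and func.id == "CDLL"))


def ctypes_handles(tree):
    """Names bound to CDLL objects, e.g. `lib` in PY_SOURCE."""
    handles = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Assign) and _is_cdll_call(node.value):
            handles.update(t.id for t in node.targets if isinstance(t, ast.Name))
    return handles


def foreign_calls(tree, handles):
    """Names of functions called through a handle, e.g. lib.poly_area(...)."""
    calls = set()
    for node in ast.walk(tree):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and isinstance(node.func.value, ast.Name)
                and node.func.value.id in handles):
            calls.add(node.func.attr)
    return calls


def cross_language_report(py_src, c_src):
    """Match Python-side foreign calls against C-side definitions."""
    tree = ast.parse(py_src)
    called = foreign_calls(tree, ctypes_handles(tree))
    defined = set(C_DEF.findall(c_src))
    return {
        "resolved": sorted(called & defined),    # Python -> C edges found in both languages
        "unresolved": sorted(called - defined),  # called from Python, no C definition seen
        "unused_c": sorted(defined - called),    # C functions with no Python caller here
    }


if __name__ == "__main__":
    print(cross_language_report(PY_SOURCE, C_SOURCE))
```

For the sample sources, `poly_area` and `free_poly` resolve, `missing_fn` is flagged as unresolved, and `helper` has no Python caller. Cross-language edges like these are the kind of information a refactoring tool needs before it can safely rename or remove a function on either side of the boundary.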

Space-Based Potential Fields: Exploring buildings using a distributed robot team navigation algorithm

Line: 19 to 22

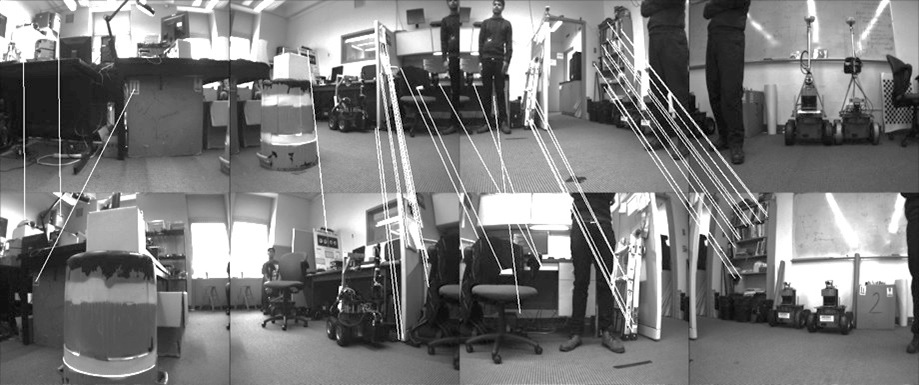

Visual homing is a navigation method that compares a stored image of a goal location with the current image to determine how to navigate to the goal. It is theorized that insects such as ants and bees use visual homing to return to their nest or hive. Visual homing has been applied to robot platforms using two main approaches, holistic and feature-based; both aim to determine the distance and direction to the goal location. Visual navigation algorithms based on the Scale Invariant Feature Transform (SIFT) have become very popular in recent years because of the robustness of the SIFT feature operator. Existing visual homing methods, such as Homing in Scale Space (HiSS), use the scale-change information from SIFT to estimate the distance between the robot and the goal location and so improve homing accuracy. However, because the scale component of SIFT is discrete, with only a small number of possible values, the result is a rough distance measurement of limited accuracy.

We have developed a visual homing algorithm, Homing with Stereovision, that uses stereo data to achieve better homing performance. It uses a stereo camera mounted on a pan-tilt unit to build composite wide-field images. We combine these wide-field images with the stereo data obtained from the camera to extend the SIFT keypoint vector with a new parameter, depth (z). Using this information, Homing with Stereovision determines the distance and orientation from the robot to the goal location. The algorithm is novel in its use of a stereo camera to perform visual homing.

We compare our method with HiSS in a set of 200 indoor trials using two Pioneer 3-AT robots. We evaluate the performance of both methods using a set of performance metrics described in our paper, and we show that Homing with Stereovision improves on HiSS on all of the performance metrics for these trials.
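Why per-keypoint depth helps can be illustrated with a hedged sketch. The code below is not the published Homing with Stereovision algorithm; it is a generic stand-in. Once each matched keypoint carries a stereo depth z as well as a bearing, the corresponding landmark can be placed on the ground plane in both the goal view and the current view, and a least-squares 2-D rigid alignment (the Kabsch/Procrustes method, swapped in here for illustration) yields the distance, direction, and relative orientation to the goal. The `StereoKeypoint` type, the `homing_vector` function, and the assumption that feature matching has already been done are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoKeypoint:
    """Hypothetical SIFT-like keypoint extended with stereo depth z."""
    bearing: float  # horizontal angle in the robot frame (radians)
    z: float        # stereo depth along that bearing (metres)

    def to_xy(self):
        # Place the landmark on the ground plane: x forward, y to the left.
        return (self.z * math.cos(self.bearing), self.z * math.sin(self.bearing))


def homing_vector(goal_kps, current_kps):
    """Estimate where the goal lies from matched keypoint pairs.

    goal_kps[i] and current_kps[i] are assumed to be the same landmark.
    Solves p_cur ~= R(phi) p_goal + t in the least-squares sense (2-D Kabsch).
    Returns (distance, direction, phi): the goal position in the current robot
    frame in polar form, plus the goal heading relative to the current heading.
    """
    if len(goal_kps) < 2 or len(goal_kps) != len(current_kps):
        raise ValueError("need at least two matched keypoint pairs")
    g = [k.to_xy() for k in goal_kps]
    c = [k.to_xy() for k in current_kps]
    n = len(g)
    gx, gy = sum(p[0] for p in g) / n, sum(p[1] for p in g) / n
    cx, cy = sum(p[0] for p in c) / n, sum(p[1] for p in c) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(g, c):
        # Work with centred coordinates so translation drops out of the rotation fit.
        ax, ay, bx, by = ax - gx, ay - gy, bx - cx, by - cy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    phi = math.atan2(cross, dot)  # best-fit rotation from goal frame to current frame
    # Translation maps the rotated goal-view centroid onto the current-view centroid.
    tx = cx - (math.cos(phi) * gx - math.sin(phi) * gy)
    ty = cy - (math.sin(phi) * gx + math.cos(phi) * gy)
    return math.hypot(tx, ty), math.atan2(ty, tx), phi
```

With noise-free matches the alignment is exact. With real stereo data, mismatched keypoints would likely need to be rejected first, for example with a RANSAC loop around `homing_vector`.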


In current work, we have modified the Homing with Stereovision (HSV) code to use a database of stored stereo imagery, and we are conducting extensive testing of the algorithm.

Ghosthunters! Filtering mutual sensor interference in closely working robot teams
