Difference: FRCVPublicProject (11 vs. 12)

Revision 12 - 2022-12-31 - DamianLyons

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

Overview of Research Projects in Progress at the FRCV Lab | ||||||||

| Added: | ||||||||

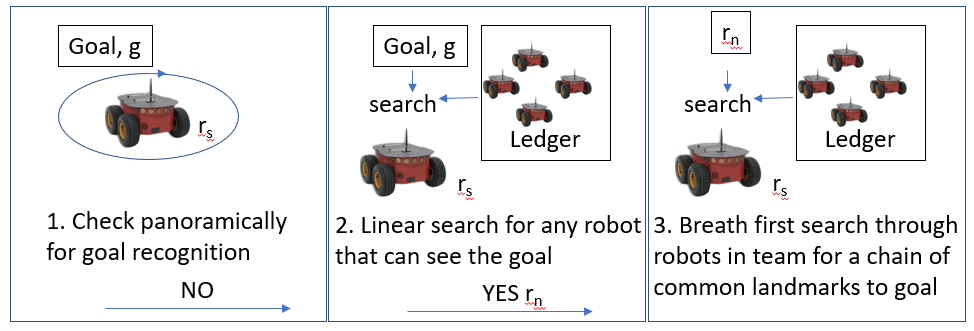

| > > | Wide Area Visual Navigation (WAVN)

We are investigating a novel approach to navigation for a heterogeneous robot team operating for long durations in an environment where there are long- and short-term visual changes. Small-scale precision agriculture is one of our main applications: high-precision, high-reliability GPS can be a barrier to entry for small family farms. A successful solution to this challenge would open the way to revolutionizing small farming to compete with big agribusiness. The challenge is enormous, however: a family farm operating in a remote location experiences all the changes in terrain appearance and navigability that come with seasonal weather changes and dramatic weather events. Our work is a step in this direction. Our approach is based on visual homing, a simple and lightweight method for a robot to navigate to a visually defined target that is in its current field of view. We extend this approach to work with targets that are beyond the current field of view by leveraging visual information from all camera assets in the entire team (and potentially fixed camera assets on buildings or other available camera assets). To ensure efficient and secure distributed communication within the team, we employ distributed blockchain communication: team members regularly upload their visual panoramas to the blockchain, where they are available to all team members in a safe and secure fashion. When a robot needs to navigate to a distant target, it queries the visual information from the rest of the team and establishes a sequence of intermediate visual targets that it can navigate to in turn using homing, ending with the final target. For a short video introduction, see here.

We are also investigating the synergy of blockchain and navigation methodologies to show that blockchain can be used to simplify navigation in addition to providing a distributed and secure communication channel.

Key to the WAVN navigation approach is establishing an intermediate sequence of landmarks by looking for a chain of common landmarks between pairs of robots. We have investigated different ways in which robots can identify whether they are seeing the same landmark, and we show that using a CNN-based YOLO to segment a scene into common objects, followed by feature matching to identify whether the objects are the same, outperforms feature matching on its own. Furthermore, using a group of objects as a landmark outperforms a single-object landmark. We are currently investigating whether object diversity in a group improves this even further.

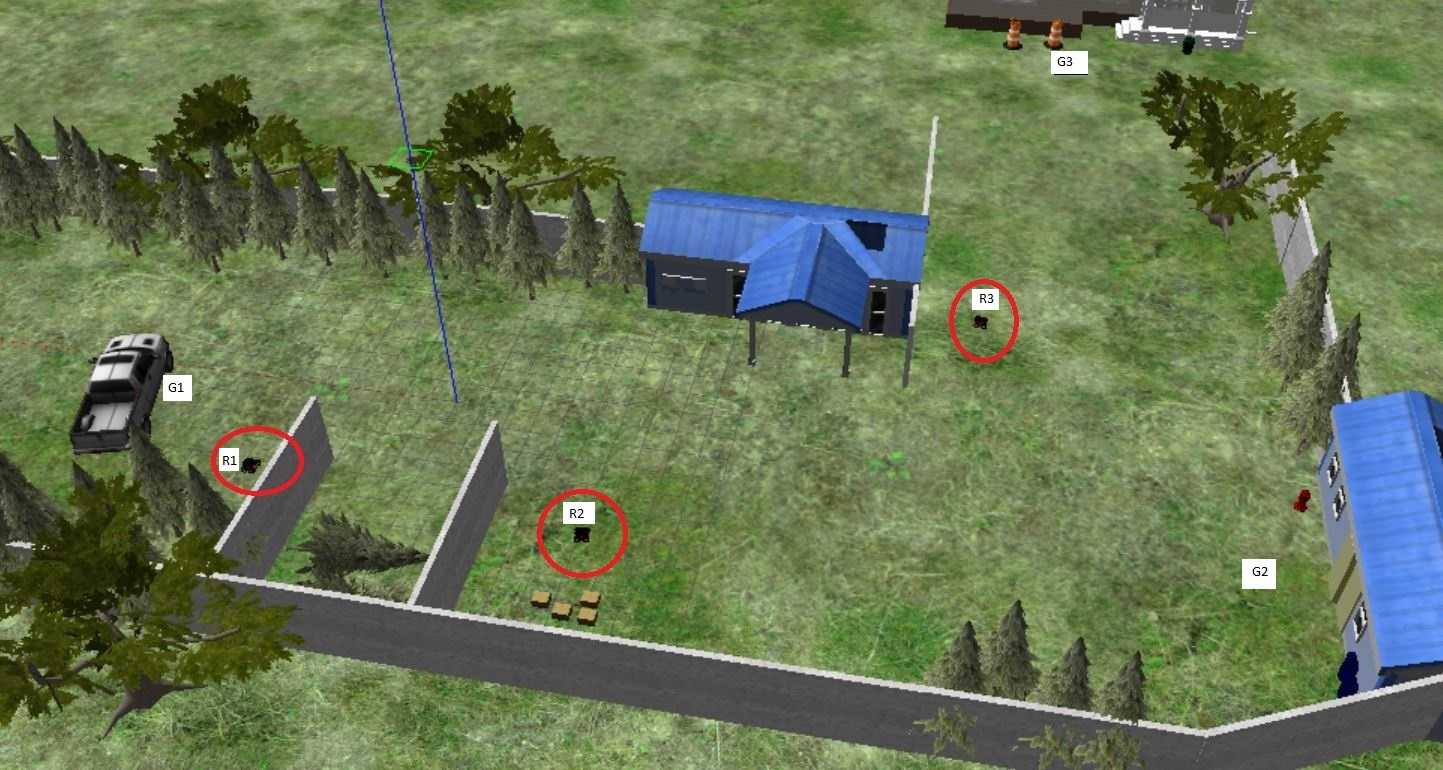

We have conducted WAVN experiments using grid simulations, ROS/Gazebo simulations, and lab experiments using Turtlebot3 and Pioneer robots. The image above shows a Gazebo simulation of three Pioneer robots in a small outdoor work area with plenty of out-of-view targets to navigate to. Our next step is to use WAVN for outdoor navigation to campus locations using a team of Pioneer robots. | |||||||
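The landmark-chain idea in the WAVN description can be illustrated with a minimal sketch. It is not the lab's implementation: it assumes each robot's panorama has already been reduced to a set of landmark labels, and the names `sightings` and `landmark_chain` are hypothetical. Two robots are treated as linked when they see at least one landmark in common, and a breadth-first search over those links yields the intermediate targets to home to in order.

```python
from collections import deque


def landmark_chain(sightings, start, target):
    """Return the landmarks to home to in order, ending with target, or None.

    sightings: dict mapping a robot name to the set of landmark labels it sees
               (an assumed, simplified stand-in for shared panoramas).
    """
    queue = deque([start])
    came_from = {start: None}  # robot -> (previous robot, shared landmark)
    while queue:
        robot = queue.popleft()
        if target in sightings[robot]:
            # Walk back through the links to recover the intermediate landmarks.
            chain = [target]
            while came_from[robot] is not None:
                robot, shared = came_from[robot]
                chain.append(shared)
            chain.reverse()
            return chain
        for other, seen in sightings.items():
            if other in came_from:
                continue
            common = sightings[robot] & seen
            if common:
                # Pick one shared landmark deterministically for the sketch.
                came_from[other] = (robot, min(common))
                queue.append(other)
    return None


sightings = {
    "r1": {"tree", "barn"},
    "r2": {"barn", "silo"},
    "r3": {"silo", "gate"},
}
chain = landmark_chain(sightings, "r1", "gate")
```

Here `r1` cannot see `gate`, but it shares `barn` with `r2`, which shares `silo` with `r3`, which sees `gate`. Breadth-first search is used only because it returns a chain with the fewest hops; the source does not say how chains are actually selected.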

Using Air Disturbance Detection for Obstacle Avoidance in Drones

The use of unmanned aerial vehicles (drones) is expanding to commercial, scientific, and agricultural applications such as surveillance, product delivery, and aerial photography. One challenge for drone applications is detecting obstacles and avoiding collisions. Small drones operating close to people, in particular, need to detect those people and avoid injuring them. Typical solutions are a camera or ultrasonic sensor for obstacle detection, or sometimes just manual control (teleoperation). However, these solutions have costs in battery lifetime, payload, and operator skill. Because small drones can carry very little payload, it is difficult to add extra equipment to them. Fortunately, most drones are equipped with an inertial measurement unit (IMU). | ||||||||

| Line: 9 to 27 | ||||||||

| We chose a small drone, the Crazyflie 2.0, as our experimental platform. The Crazyflie 2.0 is a lightweight, open-source flying development platform based on a micro quadcopter. It has several built-in sensors, including a gyroscope and an accelerometer. ROS (Robot Operating System) is a set of software libraries and tools for modular robot applications, intended to provide a standard for robotics software. ROS has simulation and visualization tools such as Gazebo and Rviz that let us run simulations before conducting real experiments on drones. Currently there is little Crazyflie support in ROS; however, we wish to use ROS for our experimentation because it has become a de facto standard. More details here | ||||||||

| Changed: | ||||||||

| < < | Multilingual Static Analysis (MLSA) | |||||||

| > > | Multilingual Software Analysis (MLSA) | |||||||

Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature, that is, written in more than one programming language: for example, some parts in C++ and some in Python. Software engineering tools typically work on monolingual programs, those written in a single language; since in practice many software systems and code bases are written in more than one language, this is often less than ideal. Melissa provides tools that analyze programs written in more than one language and generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual systems. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | ||||||||
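As a rough illustration of what a call graph across multiple languages means, the sketch below merges per-language call graphs into one graph and adds the edges that cross a language boundary. It does not reflect MLSA's actual tools or file formats: the data layout, the `lang:function` node naming, and the function names `merge_call_graphs` and `reachable` are all assumptions made for this example.

```python
def merge_call_graphs(per_language, cross_calls):
    """Combine monolingual call graphs into one multilingual graph.

    per_language: dict lang -> dict caller -> list of callees in the same language.
    cross_calls:  list of ((lang, caller), (lang, callee)) pairs for calls that
                  cross a language boundary (assumed to be detected elsewhere).
    Returns a dict mapping "lang:function" to the set of nodes it calls.
    """
    graph = {}
    for lang, calls in per_language.items():
        for caller, callees in calls.items():
            targets = graph.setdefault(f"{lang}:{caller}", set())
            for callee in callees:
                targets.add(f"{lang}:{callee}")
                graph.setdefault(f"{lang}:{callee}", set())
    for (src_lang, src), (dst_lang, dst) in cross_calls:
        graph.setdefault(f"{src_lang}:{src}", set()).add(f"{dst_lang}:{dst}")
        graph.setdefault(f"{dst_lang}:{dst}", set())
    return graph


def reachable(graph, root):
    """Return every node reachable from root, including root itself."""
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, ()))
    return seen


per_language = {
    "cpp": {"main": ["run_script"]},
    "python": {"analyze": ["load"]},
}
cross_calls = [(("cpp", "run_script"), ("python", "analyze"))]
graph = merge_call_graphs(per_language, cross_calls)
from_main = reachable(graph, "cpp:main")
```

A monolingual tool looking only at the C++ side would stop at `run_script`; with the cross-language edge included, the Python functions `analyze` and `load` also show up as reachable from `main`.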

| Line: 103 to 121 | ||||||||

| ||||||||

| Added: | ||||||||

| > > |

| |||||||
