Difference: FRCVPublicProject (10 vs. 11)

Revision 11 2019-09-18 - LabTech

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

| ||||||||

| Changed: | ||||||||

| < < | Overview of Research Projects in Progress at the FRCV Lab | |||||||

| > > | Overview of Research Projects in Progress at the FRCV Lab
Using Air Disturbance Detection for Obstacle Avoidance in Drones
The use of unmanned aerial vehicles (drones) is expanding to commercial, scientific, and agricultural applications such as surveillance, product delivery, and aerial photography. One challenge for these applications is detecting obstacles and avoiding collisions. Small drones operating near people, in particular, need to detect those people and avoid injuring them. Typical solutions are camera sensors, ultrasonic sensors, or simply manual control (teleoperation). However, these solutions have costs in battery lifetime, payload, and operator skill. Because small drones can carry very little payload, it is difficult to add extra hardware to them. Fortunately, most drones are equipped with an inertial measurement unit (IMU), whose gyroscope and accelerometer report the drone's attitude and accelerations. We note that there will be air disturbance in the vicinity of the drone when it moves close to obstacles or other drones, and the gyroscope and accelerometer data will change to reflect this. Our objective is to detect obstacles from this air disturbance by analyzing the gyroscope and accelerometer data. Air disturbance can have many causes, such as ground effect, proximity to people, or wind gusts from other directions, and these can occur at the same time, which complicates matters. To keep the experiment simple, we detect only the air disturbance produced by flying close to or underneath an overhead drone. We chose a small drone, the Crazyflie 2.0, as the experimental platform. The Crazyflie 2.0 is a lightweight, open-source flying development platform based on a micro quadcopter. It has several built-in sensors, including a gyroscope and an accelerometer.
ROS (Robot Operating System) is a set of software libraries and tools for modular robot applications. The point of ROS is to create a robotics standard. ROS has strong simulation and visualization tools, such as RViz and Gazebo, that let us run simulations before conducting real experiments on drones. Currently there is little Crazyflie support in ROS; however, we wish to use ROS for our experiments because it has become a de facto standard. More details here | |||||||
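The idea of spotting air disturbance in IMU data can be illustrated with a minimal Python sketch. It is not the lab's method: the reading format, window size, threshold, and function names are all assumptions made for illustration, and the data is synthetic. It flags a sample as disturbed when the spread of recent accelerometer magnitudes exceeds a fixed threshold.

```python
import math
from statistics import pstdev


def magnitude(sample):
    """Euclidean norm of a 3-axis reading (x, y, z)."""
    return math.sqrt(sum(v * v for v in sample))


def disturbance_flags(readings, window=5, threshold=0.5):
    """Flag each sample whose trailing-window spread exceeds the threshold.

    readings  : list of (x, y, z) tuples from one sensor (hypothetical format)
    window    : number of most recent samples in the rolling window (assumed)
    threshold : population std. dev. of magnitudes treated as disturbed (assumed)
    """
    mags = [magnitude(r) for r in readings]
    flags = []
    for i in range(len(mags)):
        recent = mags[max(0, i - window + 1): i + 1]
        # A single sample has no spread, so it is never flagged.
        spread = pstdev(recent) if len(recent) > 1 else 0.0
        flags.append(spread > threshold)
    return flags


# Synthetic accelerometer data: a steady hover followed by jittery readings.
calm = [(0.0, 0.0, 9.8)] * 10
rough = [(0.0, 0.0, 9.8 + (2.0 if i % 2 else -2.0)) for i in range(10)]
flags = disturbance_flags(calm + rough)
```

A real detector would also need to use the gyroscope channels and to separate overhead-drone downwash from other causes such as ground effect, which a single fixed threshold cannot do.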

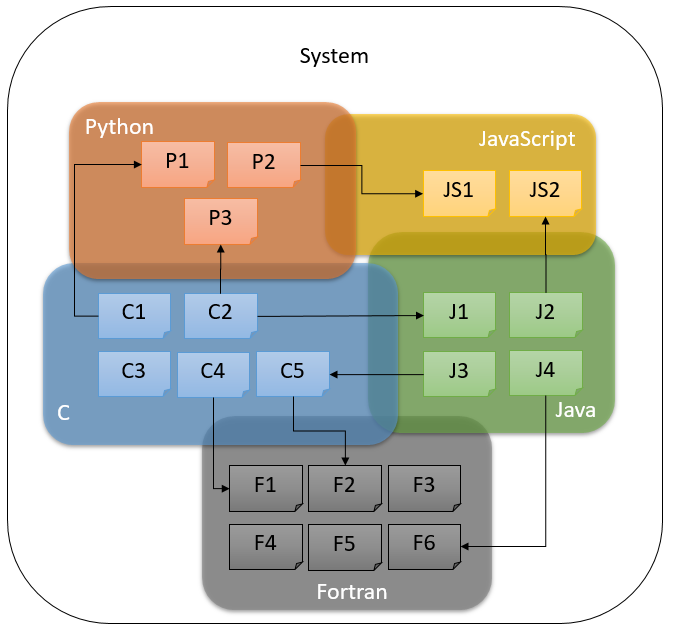

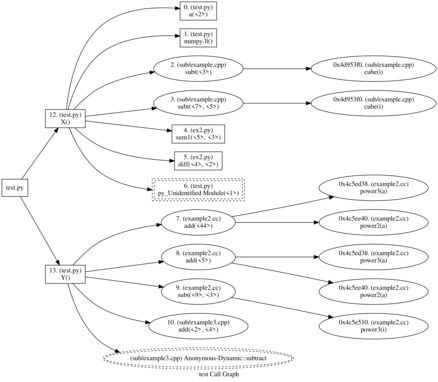

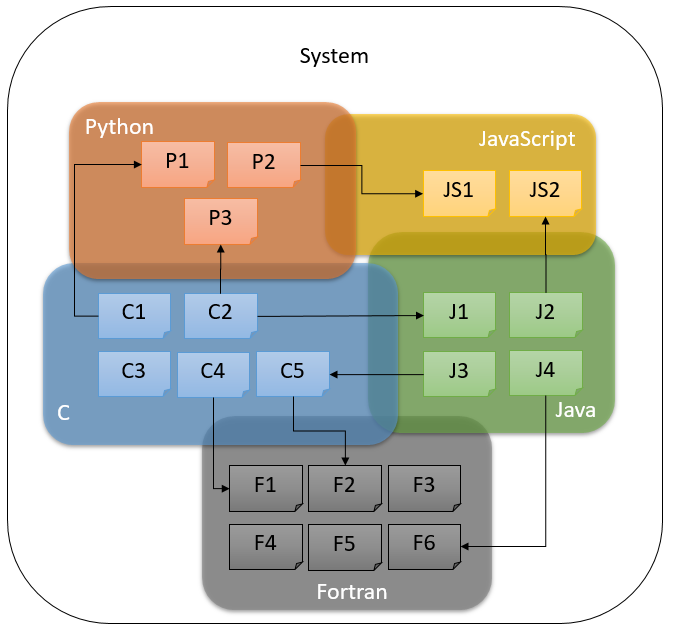

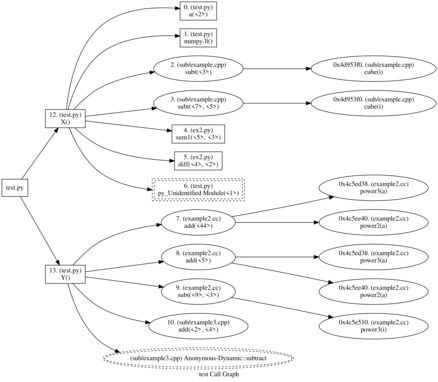

Multilingual Static Analysis (MLSA)
Multilingual Software Analysis (MLSA), or Melissa, is a lightweight tool set developed for the analysis of large software systems that are multilingual in nature (written in more than one programming language). Large software systems are often written in more than one programming language, for example, some parts in C++ and some in Python. Typically, software engineering tools work on monolingual programs, that is, programs written in a single language. Since in practice many software systems and code bases are written in more than one language, this is less than ideal. Melissa provides tools that analyze programs written in more than one language and generate, for example, dependency graphs and call graphs across multiple languages, overcoming the limitation of software tools that work only on monolingual software systems or programs. Leveraging the static analysis work developed for DTRA, we are looking at multilingual analysis to provide refactoring and other information for very large, multi-language software code bases. This project is funded by a two-year grant from Bloomberg NYC. The objective of the project is to make a number of open-source MLSA tools available for general use and comment. For more details, see here. | ||||||||

| Changed: | ||||||||

| < < |    | |||||||

| > > |    | |||||||

TOAD Tracking: Automating Behavioral Research of the Kihansi Spray Toad

| ||||||||

| Line: 45 to 53 | ||||||||

Drone project : Crazyflie | ||||||||

| Added: | ||||||||

| > > | Drones are an exciting kind of robot that has recently found its way into mainstream commercial robotics. The most recent high-profile example is their appearance in the opening ceremony of the 2018 Winter Olympics in Pyeongchang, South Korea. Their striking appearance drew high acclaim from the audience, solidifying the idea of using drones as performers in a public arena, capable of carrying out emotion-filled acts.

Beyond this example, our vision is to use drones, and more specifically drone swarms, not only to stage theatrical performances but also to operate as a collective entity that can communicate and interact meaningfully with ordinary people in daily life activities. Our thesis is that drone swarms can impart emotive communication more effectively than solo drones. For instance, drone swarms can play the role of a tour guide at attractions or museums, taking tourists through the most notable points at the site. In emergency situations that require evacuation of large crowds, drone swarms can help guide and coordinate the movement of survivors towards safe areas, as well as signal first responders towards areas where help is needed the most.
Advantages of Drone Swarms
The main advantages of drone swarms over solo drones are the added dimensions of freedom. More specifically, with multiple drones, we can

Motivating Applications
Equipped with the ability to impart emotive messages, drone swarms could be used for crowd control and guidance, e.g.,

Technical Challenges
To construct drone swarms that can communicate, interact with, and operate within the public space, we believe that the following technical challenges need to be addressed. | |||||||

| This project page is here

Older Projects | ||||||||
